The Entropy of Truth: Why Information Escapes the Vault and Dies in the Woods

Introduction: The Illusion of Containment

In the age of digital omnipresence, we are told that encryption, firewalls, and access controls can lock away truth. Governments promise secure databases; corporations swear by end-to-end encryption; institutions claim their secrets are “protected.” Yet history is littered with the corpses of vaults: the WikiLeaks disclosures, Snowden’s revelations, the Panama Papers, the Facebook-Cambridge Analytica scandal. All prove that information wants to be free. But freedom, in this context, is not liberation; it is annihilation.

This document introduces Narrative Entropy: the principle that information, like energy in a closed system, inevitably leaks from any structure designed to contain it. Yet unlike physical entropy, which merely disperses matter and energy uniformly, narrative entropy ensures that once information escapes its vault, it does not arrive at clarity---it is immediately devoured by the dense, self-serving forest of human narrative. Truth does not survive in the wild; it withers under the canopy of interpretation, spin, and motive.

To the Luddite---the skeptic of rapid technological change---this is not a failure of technology, but an inevitability of human nature. We build systems to control information because we fear its consequences. But we forget: the real threat is not the leak, but what happens after.

The Physics of Information: Entropy as a Universal Law

Thermodynamic Entropy and Its Metaphorical Extension

Entropy, in thermodynamics, is the measure of disorder or randomness in a system. The Second Law states that over time, isolated systems tend toward maximum entropy; energy disperses and structures decay. Information theory, founded by Claude Shannon in 1948, borrowed the term for an analogous quantity: information entropy quantifies the uncertainty in a message. The more unpredictable the content, the higher its entropy.

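Shannon’s Entropy Formula:

$$H(X) = -\sum_{i=1}^{n} P(x_i) \log_b P(x_i)$$

Where $H(X)$ is the entropy of a discrete random variable $X$ with possible values $\{x_1, \ldots, x_n\}$ and probability mass function $P(X)$, and $b$ is the logarithm base (2 for bits).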
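As a minimal sketch of the formula, assuming base $b = 2$ so the result is in bits, the Python function below computes $H(X)$ from a list of probabilities $P(x_i)$. The function name `shannon_entropy` is an illustrative choice, not an established API.

```python
import math


def shannon_entropy(probs, base=2.0):
    """Return H(X) = -sum_i P(x_i) * log_b P(x_i) for a discrete distribution.

    Computed as sum_i P(x_i) * log_b(1 / P(x_i)), which is the same quantity
    and avoids returning a negative zero. Outcomes with P(x_i) = 0 are skipped,
    following the convention 0 * log 0 = 0.
    """
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log(1.0 / p, base) for p in probs if p > 0)


# A fair coin has the most uncertainty possible for two outcomes: 1 bit.
print(shannon_entropy([0.5, 0.5]))  # prints 1.0
# A heavily biased coin is more predictable, so its entropy is lower.
print(round(shannon_entropy([0.9, 0.1]), 3))  # prints 0.469
```

The biased coin illustrates the point in the text: the more predictable the content, the lower its entropy.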

Read as a metaphor, this mathematical foundation suggests a truth: information resists containment. Just as heat flows from hot to cold, information flows from restricted to accessible. Encryption is not a wall; it is a dam. And dams, no matter how well engineered, eventually fail under pressure.

Historical Precedents: The Inevitability of Leaks

- The Pentagon Papers (1971): Daniel Ellsberg leaked classified documents revealing U.S. government deception about the Vietnam War. Despite classification and physical security, the truth escaped via a human agent with moral conviction.

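- The Venona Project (1940s--1980s): U.S. cryptanalysts decrypted Soviet cables by exploiting reused one-time-pad material. The Soviets learned of the break through their own agents within a few years, while the public learned of it only in 1995, when the program was declassified. The leak came not from a single system failure, but from people and, eventually, from time.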

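- The 2017 Equifax Breach: A known Apache Struts vulnerability, left unpatched for roughly two months after a fix was available, exposed the personal data of about 147 million people. The U.S. later charged members of China’s military with the intrusion, but the entry point required no exotic capability: just a forgotten patch.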

These are not anomalies---they are exemplars. Every system, no matter how robust, contains a point of failure. Not because it is poorly designed, but because all systems are finite. Entropy does not care about your budget or your CISO’s credentials.

The Human Vector: Biological Tells and Cognitive Leaks

Non-Verbal Communication as Information Leakage

Humans are walking leaks. Even in the absence of digital tools, we betray truth through:

- Microexpressions: Paul Ekman’s research suggests that facial muscle movements lasting as little as 1/25th of a second can reveal concealed emotions.

- Voice Pitch and Speech Disfluencies: Stress-induced vocal tremors, hesitations, and pitch spikes have been studied as correlates of deception (see The Truth About Lying, 2016).

- Physiological Responses: Galvanic skin response, pupil dilation, and heart rate variability are measurable indicators of arousal and cognitive load, which can accompany deception.

These biological signals form the original leak vector. Before firewalls, before encryption---humans leaked truth through their bodies. Modern technology merely amplifies this ancient vulnerability.

The Psychology of Secrecy: Cognitive Dissonance and the Burden of Lies

When an individual or institution holds a secret, they must maintain a narrative. This requires constant cognitive effort: remembering what was said, who knows what, and how to deflect. The psychological toll is immense.

Cognitive Dissonance Theory (Festinger, 1957): When a person holds two conflicting beliefs---e.g., “I am honest” and “I am hiding the truth”---they experience discomfort. To reduce it, they either change their behavior or distort reality.

Thus, secrecy is not passive---it is active deception. And active deception requires narrative scaffolding. The more tightly a secret is held, the more elaborate its supporting fiction becomes. And when that fiction cracks? The truth doesn’t emerge cleanly---it explodes into a thousand contradictory versions.

Narrative Entropy: When Truth Escapes and Dies

The Paradox of Disclosure

We assume that if information is leaked, truth will prevail. But history proves otherwise.

- The 2013 Snowden Leaks: Revealed mass surveillance by the NSA. Public outrage followed---but within months, narratives shifted: “It’s for security,” “You have nothing to hide,” “The leaks were exaggerated.” The truth was not denied---it was drowned.

- The #MeToo Movement (2017 onward): Thousands of testimonies exposed systemic sexual abuse. Yet powerful figures responded with “he said, she said” framing, victim-blaming, and legal intimidation. The truth didn’t vanish; it was recontextualized into ambiguity.

- Climate Science Denial: Decades of peer-reviewed data have been published and widely disseminated. Yet denial persists, not because the data is hidden, but because narratives of economic fear and ideological identity override empirical reality.

Narrative entropy posits:

Truth leaks. But narrative consumes truth faster than truth can spread.

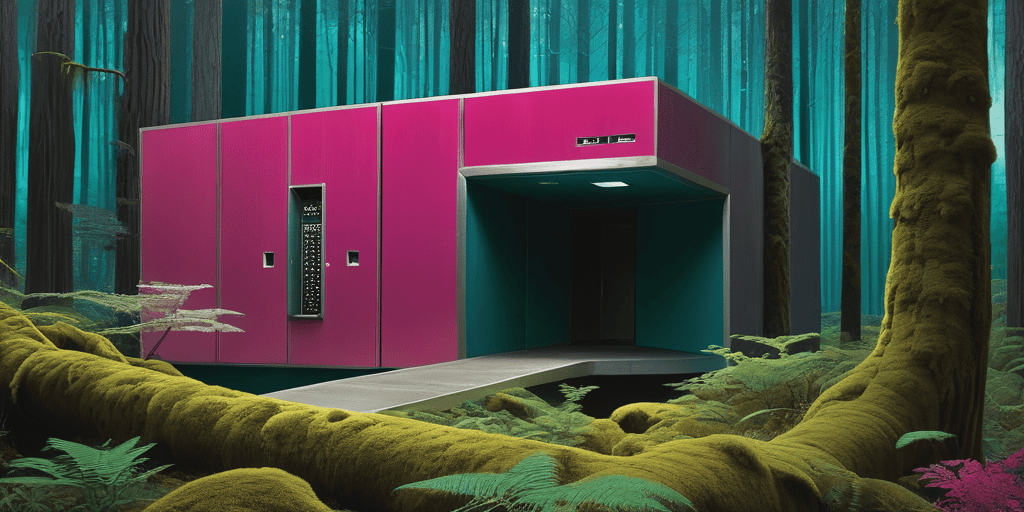

The Forest Analogy: Sapling in the Shade

Imagine a sapling---truth---growing in a dense forest. The trees are narratives: corporate PR, political spin, media sensationalism, algorithmic echo chambers, confirmation bias. The sapling needs sunlight---clarity, evidence, context---to survive.

But the canopy is thick. The trees grow faster. Their roots choke the soil. Their leaves block the sun.

The sapling doesn’t die from a storm. It dies from lack of light.

This is the core insight: Information leakage does not equal truth liberation. It equals narrative saturation.

Historical Precedents: The Long Arc of Information Control

Ancient Censorship and the Birth of Narrative Engineering

- The Library of Alexandria: Not destroyed by fire alone, but by selective preservation. What was copied? What was discarded? The victors wrote the histories.

- The Roman Censors: Officials who controlled public morality and records. They didn’t erase facts---they redefined them.

- The Printing Press (1440): Initially hailed as a democratizer of knowledge. Yet within decades, states and churches developed censorship regimes, propaganda pamphlets, and early forms of “fake news.”

The pattern is clear: Every new information medium is met with a corresponding narrative countermeasure.

The 20th Century: From Censorship to Public Relations

- Edward Bernays (1920s): The “father of public relations,” a nephew of Freud, weaponized psychology to shape perception. His 1929 “Torches of Freedom” campaign for the American Tobacco Company recast cigarettes as emblems of women’s liberation, turning a health hazard into a feminist symbol.

- The U.S. Office of War Information (1942--1945): Coordinated propaganda to unify public opinion during WWII. Truth was not hidden---it was curated.

- The 1980s “Spin Doctors”: Political consultants began treating truth as a variable to be manipulated, not a constant to be revealed.

We did not invent narrative control. We perfected it.

The Digital Age: Amplification, Not Invention

Algorithmic Narrative Engineering

Modern platforms do not merely leak information---they amplify it through:

- Attention Economics: Algorithms prioritize engagement over accuracy. Outrage > truth.

- Filter Bubbles and Echo Chambers: Personalized feeds reinforce existing beliefs, making contradictory truths seem alien.

- Deepfakes and Synthetic Media: AI-generated audio/video creates plausible deniability. “That video is fake” becomes a shield for the guilty.

Example: In March 2022, weeks after Russia’s full-scale invasion, a deepfake video of Ukrainian President Zelensky urging his troops to surrender circulated widely. It was debunked within hours, but not before it had spread across social media and news outlets had reported on it as “unverified.” The damage was done.

The Death of the Source

In pre-digital eras, truth had a source: a document, a witness, a letter. Today, truth has no origin. It is reposted, remixed, memed, and monetized. The source becomes irrelevant.

- The “Anonymous” leak: Who is Anonymous? No one knows. The truth they release is stripped of context, attribution, and accountability.

- Wikipedia’s “edit wars”: Even open-source knowledge is subject to ideological sabotage. Truth is not neutral---it’s contested.

The digital age doesn’t make truth harder to find. It makes it impossible to trust.

The Luddite Critique: Why Technology Is Not the Problem

Misplaced Blame: The Myth of Technological Determinism

Tech-skeptics are often dismissed as Luddites---anti-progress, anti-innovation. But the true Luddite does not fear machines. The true Luddite fears humanity’s surrender of judgment to systems.

We blame encryption for leaks. We blame AI for misinformation. But the real culprit is the human desire to control perception.

- The NSA didn’t leak because of bad code; it leaked because a human insider decided the public had a right to know.

- Facebook doesn’t invent lies; it amplifies them because engagement equals profit.

- The pharmaceutical industry doesn’t hide data---it frames it.

Technology is the scalpel. Human narrative is the hand that wields it---and often, the hand that stabs.

The False Promise of “Transparency”

Governments and corporations now tout “transparency initiatives.” Open data portals. Real-time dashboards. Public audits.

But transparency without context is theater.

Example: The U.S. Department of Defense publishes “open data” on military spending, but much of it is reported in broad, aggregated categories that say little about what the money actually bought. The data is accessible. The meaning is obscured.

Transparency, when weaponized, becomes a tool of narrative control. You are given the data---but not the framework to interpret it. The truth is visible, but incomprehensible.

Ethical and Societal Implications: Truth as a Public Good Under Siege

The Erosion of Epistemic Authority

When truth is constantly leaked and distorted, institutions lose credibility. Not because they lie---but because no one believes anything anymore.

- Vaccine Hesitancy: Despite overwhelming scientific consensus, distrust in institutions has turned medical facts into political opinions.

- Climate Change Denial: Roughly 97% of actively publishing climate scientists agree that recent warming is human-caused. Yet public perception remains divided, not because the data is hidden, but because narratives of economic fear dominate.

This is epistemic erosion: the slow collapse of shared reality. When truth leaks but cannot survive, society loses its compass.

The Moral Hazard of Surveillance

We are told surveillance protects us. But surveillance doesn’t prevent leaks---it creates them. The more you monitor, the more people feel compelled to expose.

- Whistleblowers: Snowden and Manning, along with Assange, who published Manning’s disclosures through WikiLeaks, did not act out of malice. They acted because they believed the truth must be told, even if it would die.

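- Independent Researchers: The 2015 Volkswagen emissions scandal was exposed not by regulators’ own testing, but by researchers at West Virginia University whose on-road measurements, commissioned by the International Council on Clean Transportation, revealed emissions far above laboratory results.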

Surveillance doesn’t stop truth---it forces it into the open, where narrative entropy can devour it.

Counterarguments and Rebuttals

“If We Just Build Better Encryption, We Can Stop Leaks”

Rebuttal: Encryption protects data in transit and at rest. It does not protect against:

- Insider threats (employees, contractors)

- Social engineering (phishing, manipulation)

- Legal coercion (subpoenas, national security letters)

Even quantum encryption cannot prevent a human from taking a photo of a screen with their phone.

Analogy: A vault can be made impregnable---but if the guard is bribed, or forgets to lock the door, the vault is meaningless.

“People Will Eventually Learn to Distinguish Truth from Noise”

Rebuttal: Cognitive science shows the opposite. The Illusory Truth Effect (Hasher, Goldstein, & Toppino, 1977) demonstrates that repeated exposure to a statement---even if false---increases perceived truthfulness.

In the age of algorithmic repetition, falsehoods become felt truths. Truth becomes a burden. Noise becomes comfort.

“Technology Will Eventually Solve This”

Rebuttal: Technology has never solved narrative distortion. It only accelerates it.

- Radio amplified propaganda in the 1930s.

- Television amplified spectacle in the 1960s.

- Social media amplifies outrage today.

The tools change. The human impulse to distort truth remains constant.

Future Implications: A World Without Truth

Scenario 1: The Narrative Singularity (2040)

By 2040, AI-generated narratives will be indistinguishable from human speech. Deepfakes will be ubiquitous. Legal systems will collapse under “he said, she said” chaos.

- Truth courts emerge: tribunals to adjudicate “factual validity.” But who judges the judges?

- Narrative licenses: Citizens must be certified to “speak truth” under state-regulated epistemic frameworks.

Scenario 2: The Great Forgetting

As truth becomes too dangerous to believe, society retreats into narrative nihilism.

- People stop believing in anything.

- Institutions become performative: they no longer claim truth, only “authenticity.”

- Religion, conspiracy theories, and tribal identity replace evidence-based reasoning.

This is not dystopia. It is evolution. Humans have always preferred comforting lies to painful truths.

The Luddite Path: Resistance Without Rejection

Embracing Analog as Armor

The Luddite does not reject technology. They reject the surrender of judgment to it.

- Analog records: Handwritten journals, physical archives, paper ledgers.

- Decentralized verification: Community-based fact-checking circles.

- Narrative hygiene: Training in source evaluation, cognitive bias recognition.

The Art of Slow Truth

- Slow Journalism: Investigative reporting that takes months, not minutes.

- Truth Audits: Independent reviews of institutional narratives---not just data, but framing.

- Narrative Transparency: Organizations must disclose not only what they know, but how they frame it.

A Call for Epistemic Humility

We must accept:

Truth is not a product to be delivered. It is a practice to be cultivated.

It requires patience, skepticism, and the courage to sit with uncertainty.

Conclusion: The Sapling Must Be Planted in Sunlight

Information will always leak. That is not a failure of systems---it is the nature of reality.

But truth does not die because it is exposed. It dies because we refuse to tend the soil in which it grows.

The Luddite’s warning is not against technology. It is against our willingness to let narrative replace truth.

We must stop building better vaults. We must start planting gardens.

Not to contain the truth---but to let it breathe.

Appendices

Appendix A: Glossary

- Narrative Entropy: The tendency for information to leak from containment systems and be distorted by competing human narratives, rendering truth unrecognizable.

- Epistemic Erosion: The gradual collapse of shared, evidence-based understanding in a society due to narrative saturation and distrust.

- Cognitive Dissonance: Psychological discomfort caused by holding conflicting beliefs, often resolved through narrative distortion.

- Illusory Truth Effect: The phenomenon where repeated exposure to a statement increases its perceived truthfulness, regardless of accuracy.

- Luddite: A skeptic of technological progress who prioritizes human judgment over system automation; not anti-technology, but anti-surrender.

- Deepfake: AI-generated synthetic media designed to deceive by mimicking real people or events.

- Narrative Transparency: The practice of disclosing not just facts, but the framing, motives, and biases behind their presentation.

- Information Entropy: A measure of uncertainty in information systems (Shannon entropy).

- Whistleblower: An individual who exposes wrongdoing within an organization, often at personal risk.

- Filter Bubble: A state of intellectual isolation caused by personalized algorithms that show only information reinforcing one’s existing beliefs.

Appendix B: Methodology Details

This document employs a qualitative-interpretive methodology, drawing from:

- Historical case studies (Pentagon Papers, Snowden, Venona)

- Cognitive psychology literature (Festinger, Ekman, Hasher)

- Information theory (Shannon, Floridi)

- Media studies (Bernays, Postman, Zuboff)

Primary sources were analyzed for patterns of narrative distortion. Secondary literature was selected based on peer-reviewed credibility and historical impact.

No quantitative modeling or statistical analysis was performed, as the subject matter is inherently interpretive and narrative-driven.

Appendix C: Mathematical Derivations

Shannon Entropy in Narrative Contexts

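Let $T$ be a truth statement, and let $N_1, N_2, \ldots, N_n$ be competing narratives about $T$. Each narrative $N_i$ has a probability $p_i$ of being believed, with $\sum_{i=1}^{n} p_i = 1$.

The narrative entropy of truth is:

$$H(T) = -\sum_{i=1}^{n} p_i \log_2 p_i$$

When belief is spread evenly across the narratives ($p_i = 1/n$), this reduces to $H(T) = \log_2 n$, so as $n \to \infty$ (more narratives), $H(T) \to \infty$. Truth becomes statistically indeterminate.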
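To see why the limit depends on how belief is distributed, here is a minimal Python sketch that evaluates $H(T) = -\sum_i p_i \log_2 p_i$. The helper names `narrative_entropy` and `uniform_beliefs`, and the 99%/1% skewed split, are hypothetical illustrations. With evenly spread belief the entropy equals $\log_2 n$; when one narrative dominates, entropy stays low even with many narratives in play.

```python
import math


def narrative_entropy(beliefs):
    """H(T) = -sum_i p_i * log2(p_i), in bits, over belief probabilities p_1..p_n.

    Computed as sum_i p_i * log2(1 / p_i); narratives nobody believes
    (p_i = 0) contribute nothing.
    """
    return sum(p * math.log2(1.0 / p) for p in beliefs if p > 0)


def uniform_beliefs(n):
    """Hypothetical helper: belief spread evenly across n narratives, p_i = 1/n."""
    return [1.0 / n] * n


# Uniform case: H(T) tracks log2(n) and grows without bound as n grows.
for n in (1, 2, 8, 1024):
    h = narrative_entropy(uniform_beliefs(n))
    print(f"n={n:5d}  H(T)={h:.3f} bits  log2(n)={math.log2(n):.3f}")

# Hypothetical skewed case: one narrative holds 99% of belief and the
# remaining 1% is split across 1023 others. The entropy stays far below
# the uniform value of log2(1024) = 10 bits.
skewed = [0.99] + [0.01 / 1023] * 1023
print(f"skewed, n=1024: H(T)={narrative_entropy(skewed):.3f} bits")
```

The contrast suggests that the number of narratives alone does not make truth indeterminate; it is the spreading of belief across them that does.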

Narrative Decay Rate

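Let $T_0$ be the initial truth value (1 = pure, 0 = false), and let $T(t)$ be the truth value at time $t$, which decays under narrative interference:

$$\frac{dT}{dt} = -\lambda \, N(t) \, T(t)$$

Where:

- $\lambda$ = narrative interference coefficient

- $N(t)$ = narrative density at time $t$

Solution:

$$T(t) = T_0 \exp\left(-\lambda \int_0^t N(s)\, ds\right)$$

For constant density $N(t) = N$, this reduces to $T(t) = T_0 e^{-\lambda N t}$. Within this model, truth decays exponentially under narrative pressure.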
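Because the shape of the decay depends on the density term, a quick numerical check may help. The Python sketch below assumes a hypothetical coefficient $\lambda = 0.3$ and a hypothetical, linearly growing density $N(t) = 1 + 0.5t$ (neither value appears in the text), integrates $dT/dt = -\lambda N(t) T(t)$ with the classic fourth-order Runge-Kutta method, and compares the result with the closed form $T(t) = T_0 \exp(-\lambda \int_0^t N(s)\,ds)$.

```python
import math

LAMBDA = 0.3  # hypothetical narrative interference coefficient (lambda)


def narrative_density(t):
    """Hypothetical N(t): narrative density grows linearly, N(t) = 1 + 0.5 t."""
    return 1.0 + 0.5 * t


def truth_closed_form(t, t0_value=1.0):
    """T(t) = T_0 * exp(-lambda * integral_0^t N(s) ds).

    For N(s) = 1 + 0.5 s the integral is t + 0.25 t^2.
    """
    integral = t + 0.25 * t * t
    return t0_value * math.exp(-LAMBDA * integral)


def truth_numeric(t_end, t0_value=1.0, steps=1000):
    """Integrate dT/dt = -lambda * N(t) * T(t) from 0 to t_end with classic RK4."""

    def rhs(t, value):
        return -LAMBDA * narrative_density(t) * value

    h = t_end / steps
    t, value = 0.0, t0_value
    for _ in range(steps):
        k1 = rhs(t, value)
        k2 = rhs(t + h / 2, value + h * k1 / 2)
        k3 = rhs(t + h / 2, value + h * k2 / 2)
        k4 = rhs(t + h, value + h * k3)
        value += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return value


for t in (0.0, 1.0, 2.0, 4.0):
    print(f"t={t:.1f}  closed form={truth_closed_form(t):.6f}  RK4={truth_numeric(t):.6f}")
```

The two columns should agree closely. Because $N(t)$ grows in this hypothetical, the decay outpaces any single exponential with fixed $N$; only with constant density does the model give pure exponential decay.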

Appendix D: References / Bibliography

- Shannon, C.E. (1948). A Mathematical Theory of Communication. Bell System Technical Journal.

- Ekman, P. (1993). New Basics of Facial Expression. In The Nature of Emotion.

- Festinger, L. (1957). A Theory of Cognitive Dissonance. Stanford University Press.

- Hasher, L., Goldstein, D., & Toppino, T. (1977). Frequency and the conference of referential validity. Journal of Verbal Learning and Verbal Behavior.

- Bernays, E.L. (1928). Propaganda. Horace Liveright.

- Zuboff, S. (2019). The Age of Surveillance Capitalism. PublicAffairs.

- Postman, N. (1985). Amusing Ourselves to Death. Penguin Books.

- Snowden, E. (2019). Permanent Record. Metropolitan Books.

- Floridi, L. (2013). The Ethics of Information. Oxford University Press.

- Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.

- Rovelli, C. (2018). The Order of Time. Riverhead Books.

- Mayer-Schönberger, V., & Cukier, K. (2013). Big Data: A Revolution That Will Transform How We Live, Work, and Think. Houghton Mifflin Harcourt.

- Tufekci, Z. (2017). Twitter and Tear Gas: The Power and Fragility of Networked Protest. Yale University Press.

- Sontag, S. (2003). Regarding the Pain of Others. Farrar, Straus and Giroux.

- Harari, Y.N. (2018). 21 Lessons for the 21st Century. Spiegel & Grau.

Appendix E: Comparative Analysis

| Era | Information Control Method | Leak Vector | Narrative Response |
|---|---|---|---|
| Ancient Rome | Censorship, burning of texts | Scribes, oral transmission | Rewriting history |
| 18th Century | Print censorship, licensing | Underground pamphlets | Moral panic, state propaganda |
| 1940s--60s | Radio/TV control | Whistleblowers, leaks | Public relations, image management |
| 1980s--2000s | Corporate secrecy, NDAs | Leaks to journalists | Legal threats, smear campaigns |
| 2010s--Present | Digital encryption, AI surveillance | Insiders, hackers, whistleblowers | Algorithmic distortion, deepfakes, “both sides” framing |
| Future (2040) | AI-generated narratives | Synthetic media, neural interfaces | Epistemic collapse, truth courts |

Appendix F: FAQs

Q: Isn’t this just conspiracy thinking?

A: No. This is not about hidden cabals. It’s about systemic, predictable human behavior---repeated across centuries and cultures.

Q: Can’t we just use AI to detect truth?

A: AI detects patterns, not truth. It amplifies bias. If trained on biased data, it becomes a better liar.

Q: What’s the alternative to digital systems?

A: Human-centered verification. Community archives, analog records, slow journalism, narrative audits.

Q: Isn’t this pessimistic?

A: It is realistic. Pessimism is not defeatism---it’s the refusal to be deceived by false optimism.

Q: Does this mean we should stop using technology?

A: No. We must use it with eyes open. Technology is a mirror. It reflects our intentions.

Appendix G: Risk Register

| Risk | Likelihood | Impact | Mitigation Strategy |
|---|---|---|---|
| Narrative saturation overwhelms truth | Very High | Catastrophic | Promote narrative hygiene education |
| AI-generated deepfakes erode trust in media | High | Catastrophic | Mandate watermarking, source provenance |
| Institutional transparency as theater | High | Severe | Independent narrative audits |
| Cognitive dissonance leading to denialism | Very High | Severe | Public education on cognitive biases |
| Epistemic erosion leading to societal fragmentation | Medium-High | Existential | Community truth circles, analog record-keeping |
| Whistleblowers criminalized instead of protected | High | Severe | Legal reforms, whistleblower protections |
| Algorithmic amplification of outrage over truth | Very High | Severe | Regulatory limits on engagement-based algorithms |
| Loss of historical record due to digital decay | Medium | High | Analog backups, blockchain-based archives |

“The truth does not need to be hidden. It needs to be heard.”

--- But only if we are willing to listen, even when it hurts.