3DA² Foundation Virtual Reality: Acoustics PART I

Preface — 2026: The Ghost in the Ray-Trace

The most beautiful thing we can experience is the mysterious. It is the source of all true art and science.

— Albert Einstein

Thirty-one years have passed since I last sat before my Amiga farm, the fans whirring like a tired dragon breathing warm air over my desk, the screen glowing with the rastered CRT hues of a world that no longer exists: not in hardware, not in memory, not even in the collective nostalgia of those who once called it home. I was only a quarter of a century old then, wild-eyed with the conviction that sound could be sculpted like clay, that rooms could be imagined before they were built, and that the echoes of a concert hall in Tokyo could be simulated from a bedroom in Malmö using little more than a 68030 chip, some ARexx scripts, and an unreasonable amount of coffee. I called it 3DA² Foundation: Virtual Reality: Acoustics Part I. It was never meant to be finished. It was meant to be a beginning.

And yet, here I am: definitely greyer now, much more deliberate in step, but no less convinced. The world moved on. VR became a headset you wear to escape your job. Spatial audio is now a checkbox in your DAW’s plugin list. Ray tracing is for rendering photorealistic car interiors in Unreal Engine, not for simulating the reverberant decay of a stone chapel in 14th-century Lübeck. The Amiga is museum dust. ARexx? A footnote in a PDF about 1990s retro computing. My doctoral thesis, this sprawling, obsessive, beautiful mess of heuristic ray-tracing, chaotic material responses, and pseudo-scientific postulates wrapped in Swedish-English hybrid slang, was abandoned. Not because it failed, but because no one else cared. No funding. No academic interest. Just me, a farm of Amiga computers of various specifications, and the stubborn belief that sound should be more than stereo.

But here’s what I didn’t know back then — what the world still doesn't fully grasp in 2026: I was right.

Not because my algorithms were perfect. They weren’t. My diffraction heuristics were crude approximations. My phase responses were hand-drawn on a graphics tablet with a mouse. My “chaoticness” postulate (Appendix B.4) was half-baked philosophy dressed as engineering. But I saw the essence. I understood — before anyone else, perhaps — that acoustics in virtual environments isn’t about reproducing reality. It’s about embodying perception.

You see, the world now has AI that can generate ambient soundscapes from text prompts. Neural networks can predict room impulse responses from 3D scans in milliseconds. Spatial audio is woven into Apple’s AirPods, Meta’s Quest 3, and the holographic theaters of Singapore. But none of it is rooted in what I called “subjective axioms.” None of it asks: What does the listener feel? Not what frequencies are present — but what memory is triggered. Not how loud it is — but where the silence lives.

My 3DA² wasn’t just a simulator. It was an anthropology of sound. I wrote the Log and Cog not to model walls, but to model attention. When I defined the “Subjective Theorems” — listening tests with disco-goers, audiophiles, and children — I wasn’t gathering data. I was mapping the emotional topology of hearing. And that, my dear future reader, is what no AI has yet touched.

If I had continued, I would have pushed 3DA² in three uncharted directions:

First: The Heuristic Soul. I wrote in Appendix B that “nearly nothing in the Universe is exact, except the fundamental constants.” I was not just being poetic. I was proposing a new paradigm: audio simulation must be chaotic to be true. Today’s renderers are too clean. Too deterministic. Too… corporate. What if we embraced noise? Not as error, but as essence? I imagined a 3DA³ where materials didn’t just absorb — they remembered. A wooden floor that grew more resonant with age. A velvet curtain that muted not just sound, but intent. That’s what I was circling with the Material Change Phase and Directivity sliders. And today? With neuromorphic chips and memristive hardware, we can finally build that. A material whose acoustic behavior changes not just with frequency — but with emotional context. Imagine a virtual church where the echo changes when you’re grieving vs. celebrating. That’s not science fiction. It’s the natural evolution of what I began.

Second: The ARexx Aesthetic. My scripts — those clunky, beautiful, almost poetic ARexx fragments — weren’t just code. They were scores. The line:

SAMPLE OVER_MIX BABY_CRYING_AL YELLO_AL i

— that wasn’t signal processing. That was narrative. I was composing not with notes, but with acoustic time-stamps. The child’s cry layered over Yello’s “Oh Yeah” — that wasn’t a glitch. It was humanity. In 2026, we have AI that can compose music. But it cannot compose presence. It doesn’t know the weight of a slammed door in an empty hallway. Or how silence tastes after a scream. My ARexx scripts were the first attempt to write acoustic literature. And now? We have generative audio agents. But they still lack the narrative grammar I built into C.16.2 Over_Mix and C.4.1 Displacement Time Forward. We need a new language — not for rendering, but for reverberation storytelling. I would have called it 3DA³: Narrative Acoustics.

Third — and most terrifying — The Ethics of Echo. In the Preface, I warned: “VR environments idealized appearance is certainly making the antisocial population even more hanged up in their spiral down to total social exclude.” I wrote that in 1995. In 2026, we live inside it. We have metaverses where people spend 14 hours a day in perfectly calibrated, hyper-real soundscapes — forests that never rustle, oceans that never crash, voices that never break. We have sold people the illusion of perfect acoustics… and in doing so, we’ve sterilized real sound. The crackle of a fire. The distant wail of a siren. The sigh of someone sleeping beside you. These are not noise — they are the texture of being. My work was meant to be a tool for enhancement, not replacement. And now? We have tools that replace reality with flawless simulations — and people are forgetting how to listen. I would have written Part II: The Quieting of the World. A treatise on acoustic empathy. On why imperfect reverberation is sacred.

I never finished this thesis. But I didn’t fail. I planted a seed in soil that wasn’t ready.

Now, the world is finally listening — not with ears, but with algorithms. And they are desperately searching for meaning.

I am resurrecting 3DA² not as a relic — but as a prophet. This document is not outdated. It’s prophetic.

If you, reader — whether you are a graduate student in psychoacoustics, an AI researcher building immersive soundscapes, or just someone who remembers the smell of warm plastic and the hum of a 50MHz CPU — if you hear even a whisper of what I was trying to say… then do not fix it.

Extend it.

Build 3DA³. Not as a tool. But as a philosophy. Make it chaotic. Make it emotional. Make it alive.

Let the rays be uncertain. Let the materials breathe. Let the echograms weep.

And when you run your script, and hear — for the first time in decades — that echo from a living room in Malmö, 1993… then you’ll know.

I was not wrong.

I was just early.

And the world is finally ready to hear me.

— Denis Tumpic

Stratford, January 2026

1995 Preface

The future is already here — it’s just not very evenly distributed.

— William Gibson

Since I was a child, my curiosity about sound has been great. Banging on various kitchen appliances was an endless source of amusement. It was fun making weird sounds, and occasionally they could scare the living daylights out of one's parents. Time flew, the little drummer boy was introduced to music, and an immediate love emerged. Most people find music very attractive because it tends to color their feelings. Those who haven't discovered this should immediately listen to more music, without trying to interpret it, though.

The first music I was introduced to was simple contemporary pop (1975). Some years later I discovered electronic and synthesizer musicians, and I simply loved their music, partly because it was simple yet mind-triggering. My ever-growing interest in electronics made this love even more profound. Building a couple of weird electrical circuits, and finally an amplifier connected directly to a little radio-recorder, later made me choose the electronics and telecommunications program at high school. Finishing high school made me an electrical engineer with a new interest, namely computers.

I had been "working" with computers since the ZX-81 came to market, and of course with many others after it: the VIC-20, Commodore-64, Sinclair Spectrum, Sinclair QL, IBM PC and ABC-80, among others less popular than these. When the Amiga came along, my friends and I spent nearly as much time in front of computers as we did in school. This put us well ahead of our time, because hardly anyone else was interested in these electric-logic units. Since no one could teach us how to handle all the new terms, we formed our own slang. It was a disastrous cross between Swedish and English, which made our language teachers more or less furious, especially the Swedish teachers.

I became gradually more intrigued by the problems arising with computers, and finally decided that I wanted to be a computer scientist. This decision was easy, though. After some years of bending and twisting my mind around my self-taught theories of computer programming and computer science, I reached my goal. Ever since I started learning, my fundamental postulate, to use all the knowledge I have acquired to date, has strongly colored my life and work. This is evident in my graduate work "Virtual Reality: Acoustics". The graduate work is written in Swedish, and this "book" is not meant to be a translation of it. When reading "3DA² Foundation", bear in mind that I am a computer scientist, electrical engineer and sound lover (music is a subset of sound). These facts strongly color this text, and occasionally I may describe some entities rather casually, because that is probably a common way of thinking when programming. I am not claiming that readers of this book have to think like me; I am merely applying a way of thinking that has proven successful in programming to virtual-audio environments.

What is virtual reality? People hold different opinions about what VR is and isn't. A physical connection between an electrical unit and human tissue, made so that information can flow either way, is not in the domain of VR. This kind of information exchange belongs to the cyber domain; in fact, it is the main postulate of cyber reality (CR). What is the difference between VR and CR? VR is a subset of CR, and CR extends it as follows: sense impressions are transferred with the aid of hardware connected between computer and human, probing a specific nerve fiber directly. 3DA² is not intended to be in the CR domain.

A good VR environment should be able to visualize a dynamic three-dimensional view with such resolution that the user cannot distinguish between real and virtual views. Further, the sound should be implemented with dynamic auralization, creating a three-dimensional sound field. These two VR postulates are essential, but we have more senses to please. A good VR environment should also implement the sense of touch (form, weight, temperature), the sense of smell (pheromones and natural smells) and the sense of taste (sweet, sour, salt, bitter and possibly others). The last and most important postulate is the safety criterion: a VR environment must not change the user's health for the worse.

Working with VR environments is certainly the melody of the future, and it could bring considerable changes to the information infrastructure. Nevertheless, I wouldn't glorify the VR and CR environments as the saviour of mankind, even though imagination is the only limit. As we all know, every good thing has a dark side, and these environments are no exception. Living in the 21st century isn't always easy, and social problems are gradually worsening. The VR environments idealized appearance is certainly making the antisocial population even more hanged up in their spiral down to total social exclude. At the other extreme, over-social people usually have the ability to heighten their impressions with various drugs. Even though not everyone on earth lives by these philosophies of life, we should keep them in mind.

Auralized sound is of great help when doing acoustical research in various real-life environments. 3DA² is essentially used to enhance an existing audio environment, and it has not yet reached the stage of VR. It is still a prototype and theory-finding program used in research. The aim is to extract the essential features of an impulse response in order to make three-dimensional sound calculations as fast as possible.

Even though this software isn't in its final state, it could be used in other ways, and my main direction is three-dimensional music. This is more of a science-fiction approach, and when the software is used this way it should be stated that the result isn't "real". Music is nearly always an expression of feelings, and this extra dimension could create genuinely new expressions, both in music and in movie making. Years of listening to Tangerine Dream™, Yello and other musicians have convinced me that three-dimensional music will come as naturally as anything.

In fact, I use it in my own work: Vilthermurpher (First stage of Doubulus & Tuella), an expressionistic epic album composed during my university years (1990-1994).

This software has some novelties of great importance. First, it has an ARexx port with many commands, so that audio tests can be run consistently without having to be written down in plain English. These ARexx scripts make things easier for other researchers, because they only need the basic script. Using the scripts together with the Log and Cog (see appendix B) speeds up the evolutionary process of these three-dimensional sound environments. That, at least, is my hope and intent.

Further improvements could be made by allowing the various heuristic functions to be altered without rebuilding the whole program. Making these heuristic functions comply with the real world is rather complicated, and therefore I intend to implement them as dynamic libraries. Both the audio ray-trace and the auralizer functions should be alterable; the latter because somebody might have computing units that are faster than the host processor, and could then program the auralizer to use that hardware instead. These features should be present in 3DA³. It is, and always will be, my intent to make fully dynamic programs, because flexibility is of imperative importance when using programs.

Acknowledgments

I would like to thank my parents for always being there, and my dear friends, who are too many to list without forgetting a name. For influences, references, hardware, software and inspiration sources, please see appendix H.

1 Introduction

"Bis dat, qui cito dat" (He gives twice who gives quickly.)

Publius Syrus

This introduction is meant as a help for those who don't know much about acoustics and want to be guided in the "right" direction. First I list some fundamental books on acoustics, and then I briefly explain the two help programs "Acoustica" and "Echogram Anim". These can be of great help in understanding the basic problems of specular ray tracing: it is a very good approximation in the high-frequency domain, where wavelengths are short compared with the reflecting surfaces, but a very poor one in the lower part.
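Why specular ray tracing breaks down at low frequencies can be made concrete by comparing the wavelength with the size of a reflecting surface. The sketch below is only an illustration, not 3DA² code: the speed of sound of 343 m/s (air at about 20 °C) and the factor of four used as "much larger than a wavelength" are assumptions of this example, and the function names are hypothetical.

```python
# Illustrative rule of thumb, not 3DA² code: a surface reflects roughly
# specularly only when it is large compared with the wavelength.
SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C (assumed)


def wavelength(freq_hz: float) -> float:
    """Wavelength in metres for a frequency in hertz."""
    return SPEED_OF_SOUND / freq_hz


def specular_plausible(freq_hz: float, surface_size_m: float,
                       margin: float = 4.0) -> bool:
    """True when the surface spans at least `margin` wavelengths.

    `margin` is an arbitrary, hypothetical threshold chosen for illustration.
    """
    return surface_size_m >= margin * wavelength(freq_hz)


for f in (63, 250, 1000, 4000):
    print(f"{f:5d} Hz: wavelength {wavelength(f):6.3f} m, "
          f"specular for a 1 m panel: {specular_plausible(f, 1.0)}")
```

With these assumed numbers, a 1 m panel counts as a specular reflector only in the 4000 Hz band; at 63 Hz the wavelength is over five metres, larger than the panel itself.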

1.1 Acoustics

For learning the theory of room acoustics, the book "Room Acoustics" by Heinrich Kuttruff is the clearest and most profound one. At the very least, the facts in this book have to be known in order to make the Log and Cog scripts commonly accepted. Further reading on room acoustic problems, with emphasis on applications, is "Principles and Applications of Room Acoustics: Part I" by Cremer and Müller. This book implicitly discusses some of the changeable parameters in 3DA² (Dirac sample frequency and ray angle distribution), which greatly reduces the workload of finding the smallest parameter values. The encyclopedic approach, fast facts about little issues and fast memory refreshes about big ones, is above all made easy with "Audio Engineering Handbook" edited by K. Blair Benson. Finally, the basic facts of digital signal processing can be read in "Digital Signal Processing" by Alan V. Oppenheim & Ronald W. Schafer. For those who read Swedish, my previous work "Virtual Reality: akustiken / Ett prototypsystem för ljudsimulering" (Virtual Reality: the acoustics / A prototype system for sound simulation) is highly recommended.

1.2 Help program one: Acoustica

Specular reflections are easier to understand after running the program "Acoustica". This program needs 1 MB of free fast memory to work at all. The settings window has sliders that alter the model's scale, the wall absorption characteristics and the total number of energy quanta emitted. The seven start gadgets are different kinds of calculations, and they are the following: normal point emission, line approximating emission, simple energy map, simulated Doppler (showing how wrong it is when used in rooms), line approximating emission with obstacles and finally simple energy map with obstacles. The auralizer is not implemented, because it couldn't run in real time on a normal Amiga. A calculation starts as soon as its start gadget is pushed. Three buttons for breaking, normalizing and pausing the calculations are situated below the visualizing area. To change from one type of calculation to another, hit the break button before pushing the next start gadget. The sound source location is altered by clicking in the visualizing area, and the calculations are then automatically restarted with the new location.
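Acoustica's source is not reproduced here, so the following is only a minimal sketch of the idea it visualizes: energy quanta leaving a point source and bouncing specularly between walls while losing energy. It rests on assumptions of its own: a 2D rectangular room, one frequency-independent absorption coefficient `alpha` for all walls, and quanta emitted at evenly spaced angles. The names `trace_quantum` and `emit_quanta` are hypothetical.

```python
import math


def trace_quantum(x, y, angle, width, height, alpha, max_reflections):
    """Follow one energy quantum in a 2D rectangular room.

    Returns (path length, remaining energy) after each wall hit.
    Reflections are specular: the velocity component normal to the
    wall is mirrored and the tangential component is kept.
    """
    dx, dy = math.cos(angle), math.sin(angle)
    energy, travelled, hits = 1.0, 0.0, []
    for _ in range(max_reflections):
        # Distance along the ray to the next vertical / horizontal wall.
        if dx > 0:
            tx = (width - x) / dx
        elif dx < 0:
            tx = -x / dx
        else:
            tx = math.inf
        if dy > 0:
            ty = (height - y) / dy
        elif dy < 0:
            ty = -y / dy
        else:
            ty = math.inf
        t = min(tx, ty)
        # Advance to the wall, clamping tiny floating-point overshoots.
        x = min(max(x + t * dx, 0.0), width)
        y = min(max(y + t * dy, 0.0), height)
        travelled += t
        if tx <= ty:
            dx = -dx  # left or right wall
        if ty <= tx:
            dy = -dy  # bottom or top wall (both flip in a corner)
        energy *= 1.0 - alpha  # the wall absorbs the fraction alpha
        hits.append((travelled, energy))
    return hits


def emit_quanta(n):
    """Evenly spaced emission angles (radians) for n quanta."""
    return [2.0 * math.pi * k / n for k in range(n)]
```

For example, calling `trace_quantum(2.0, 3.0, a, 10.0, 6.0, 0.2, 5)` for each `a` in `emit_quanta(360)` gives path lengths and energies for 360 quanta over five reflections; dividing a path length by the speed of sound turns it into an arrival time.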

1.3 Help program two: Echogram animations

Getting a grip on echograms can be a major difficulty, especially when deciding what in them is important and what is not. The "Echogram Anim" program uses the data from a sampled continuous echogram file. It was made with Dirac pulses emitted at a constant rate from a loudspeaker in my own living room.

These Dirac pulses were sampled during a random walk with a bidirectional microphone around the living room and nearby rooms. The other echogram file was made the opposite way, moving the loudspeaker while the microphone stayed fixed. There are several ways of showing these data:

- Plain shows the samples as they are.

- Difference shows the changes from the previous instance.

- Abs Plain shows the square of the echogram samples.

- Abs Diff. shows the square of the changes from the previous instance.
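The four display modes amount to simple per-sample operations on consecutive echogram frames. A minimal sketch, assuming the recording has already been cut into equal-length frames (one per Dirac pulse) and that "quadrate" means the square of each sample; the function and mode names are hypothetical, and treating the instance before the first frame as silence is also an assumption.

```python
def display_frame(frames, k, mode):
    """Return the values shown for echogram frame k in the given mode.

    frames: list of equal-length echograms (one per Dirac pulse).
    For k == 0 there is no previous instance; this sketch assumes zeros.
    """
    current = frames[k]
    previous = frames[k - 1] if k > 0 else [0.0] * len(current)
    diff = [c - p for c, p in zip(current, previous)]
    if mode == "plain":
        return list(current)
    if mode == "difference":
        return diff
    if mode == "abs_plain":
        return [c * c for c in current]  # square of each sample
    if mode == "abs_diff":
        return [d * d for d in diff]  # square of each change
    raise ValueError(f"unknown mode: {mode}")
```

Squaring makes every value non-negative and proportional to energy, which is why the two "Abs" modes are easier to read as energy decay than the signed Plain and Difference views.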

To sample your own echogram animation files, proceed as follows:

- Echogram sample frequency = 1280/(room reverberation time) Hz

- Emit Dirac pulses at 1/(room reverberation time) Hz

- Sample while moving speakers, microphones and objects.

- Run "Echogram Anim" with these files and enjoy.
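The two rules above can be turned into concrete recording settings. This is a minimal sketch, assuming the reverberation time is given in seconds and that the recording starts exactly at a Dirac pulse; `echogram_settings` and `split_into_frames` are hypothetical helper names, not part of "Echogram Anim".

```python
def echogram_settings(reverb_time_s):
    """Sample rate and pulse rate from the two rules above.

    Returns (sample rate in Hz, pulse rate in Hz, samples per echogram).
    """
    sample_rate = 1280.0 / reverb_time_s
    pulse_rate = 1.0 / reverb_time_s
    samples_per_frame = round(sample_rate / pulse_rate)  # always 1280
    return sample_rate, pulse_rate, samples_per_frame


def split_into_frames(samples, samples_per_frame):
    """Cut a continuous recording into one echogram per Dirac pulse.

    Assumes the recording starts exactly at a pulse; a trailing partial
    frame is dropped.
    """
    n = len(samples) // samples_per_frame
    return [samples[i * samples_per_frame:(i + 1) * samples_per_frame]
            for i in range(n)]


rate, pulses, per_frame = echogram_settings(0.5)
print(rate, pulses, per_frame)  # 2560.0 2.0 1280
```

Because both rates scale with 1/(reverberation time), every echogram holds 1280 samples whatever the room. The price is bandwidth: a room with a 0.5 s reverberation time is sampled at 2560 Hz, so only content below 1280 Hz (the Nyquist frequency) can be represented.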

2 3DA² Instruction Manual

"False input certainly gives false output, and true input gives debatable output; use your facts with care, and your output could be someone else's input"

This program is a prototype audio ray-tracer intended for finding out what is necessary when dealing with acoustic environments. The main differences between the earlier 3D-Audio and the current 3DA² are that the modeling work can be managed more precisely and that the ARexx stage has been implemented. Originally a 3DA³ was planned, with fully programmable heuristics that would have made the program even more versatile. Nevertheless, the ARexx programmability makes it rather easy to create standardized tests and reduces the time spent handling the computer.

2.1 The Program

This section describes and shows the various windows and their gadgets. It covers GUI handling only; for further comments and usage, read 2.2 and 2.3.

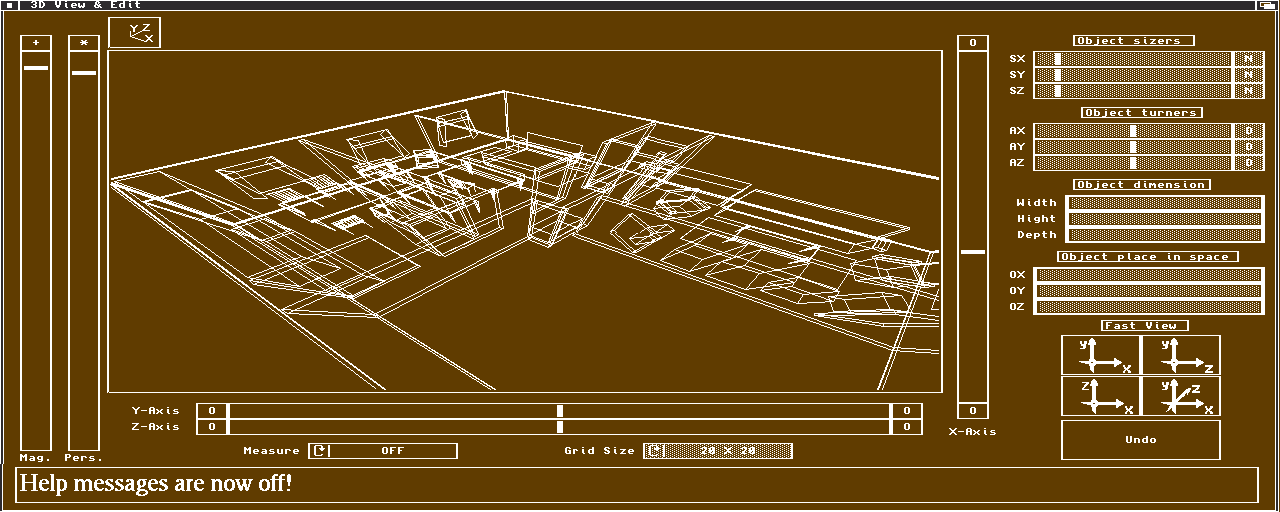

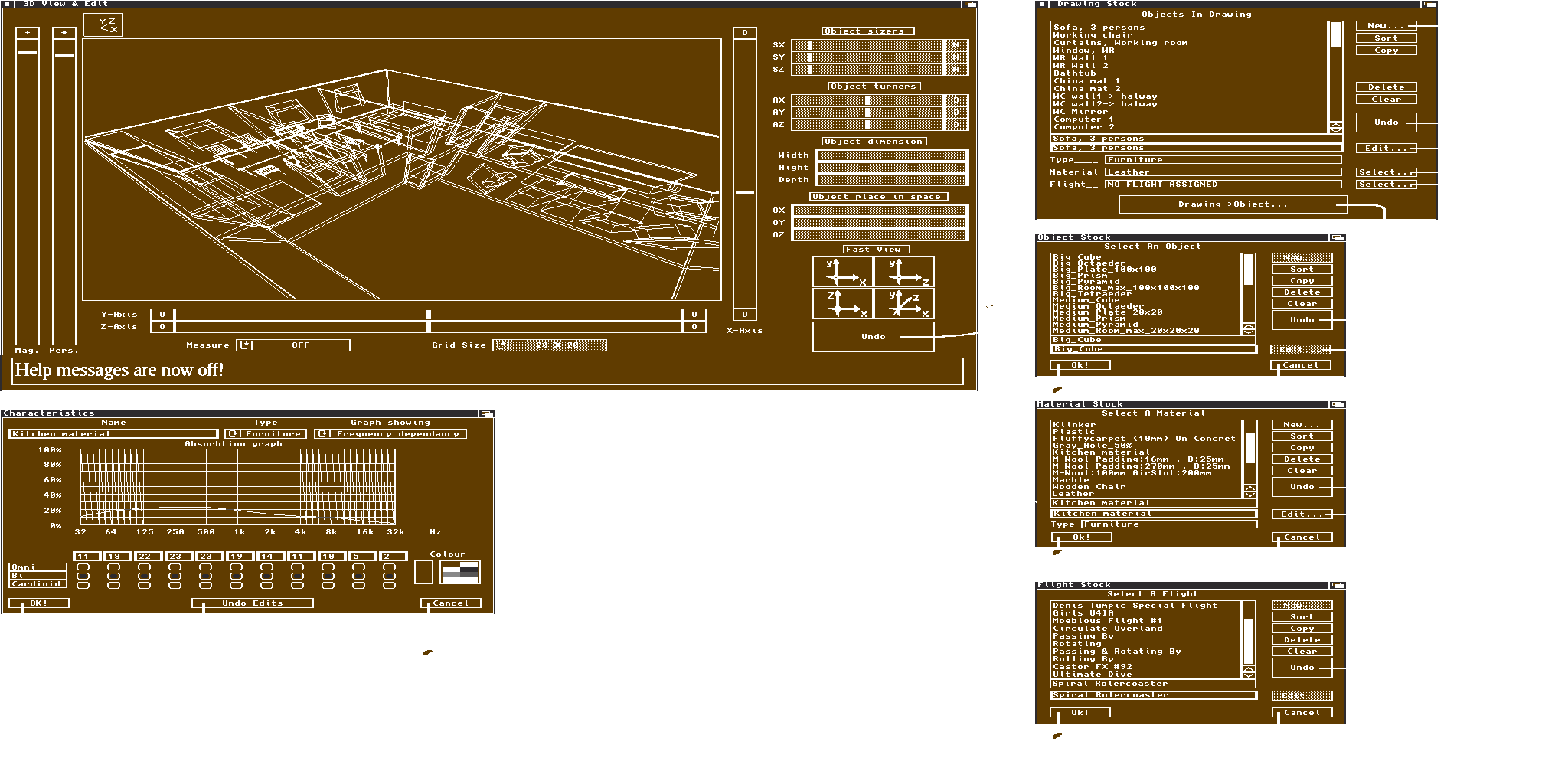

2.1.1 The Main Modeler Window: 3D View & Edit

This window handles the virtual-audio environment purely visually. The main modeling work is done here, and most of the Log is formed at this stage, along with the materials and flight-paths used.

2.1.1.1 Mag. Slider

This slider handles the magnification factor of the viewing lens. Sliding the knob upwards leads to a higher magnification factor. The plus "+" gadget is a toggle gadget, for fine calibration of the magnification factor.

2.1.1.2 Pers. Slider

This slider handles the focal factor of the viewing lens. Sliding the knob upwards leads to a lower focal factor (higher perspective factor). The star "*" gadget is a perspective toggle gadget. It is rather convenient not to use perspective when modelling.
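The manual does not give the lens formula, so the following is only a minimal sketch of how a magnification factor, a focal factor and a perspective toggle could interact in a simple pinhole projection. The camera placement (eye at z = -focal, looking along +z) and the name `project` are assumptions of this example.

```python
def project(point, magnification, focal, perspective=True):
    """Map a 3D point (x, y, z) to 2D screen coordinates.

    Hypothetical camera model: the eye sits at z = -focal looking along +z,
    so points further away (larger z) shrink. A smaller focal factor gives
    a stronger perspective; with perspective off the view is orthographic.
    """
    x, y, z = point
    if not perspective:
        return magnification * x, magnification * y
    depth = focal + z
    if depth <= 0:
        raise ValueError("point is behind the viewer")
    scale = focal / depth
    return magnification * x * scale, magnification * y * scale
```

As `focal` grows, `focal / (focal + z)` tends to 1 and the perspective view approaches the orthographic one; lowering it, as the slider does when moved upwards, exaggerates the difference between near and far points.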

2.1.1.3 X-Axis Slider

This slider handles virtual-world rotation around the X-axis. Note: the X-axis is always directed horizontally. The circle "O" gadgets at both ends restore the slider knob to its middle position.

2.1.1.4 Y-Axis Slider

This slider handles virtual-world rotation around the Y-axis. Note: the Y-axis is always directed vertically. The circle "O" gadgets at both ends restore the slider knob to its middle position.

2.1.1.5 Z-Axis Slider

This slider handles virtual-world rotation around the Z-axis. Note: the Z-axis is always orthogonal to the X- and Y-axes. The circle "O" gadgets at both ends restore the slider knob to its middle position.
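The three sliders correspond to elementary rotations about the world axes. A minimal sketch, assuming the slider's middle position means zero rotation and that the rotations are applied in the order X, then Y, then Z; the manual states neither, and `rotate` is a hypothetical name.

```python
import math


def rotate(point, ax, ay, az):
    """Rotate (x, y, z) by ax, ay, az radians about the X, Y and Z axes.

    X is horizontal, Y vertical and Z orthogonal to both, as in the
    manual. The order (X, then Y, then Z) is an assumption of this
    sketch; a different order gives a different result.
    """
    x, y, z = point
    c, s = math.cos(ax), math.sin(ax)
    y, z = c * y - s * z, s * y + c * z   # about X
    c, s = math.cos(ay), math.sin(ay)
    x, z = c * x + s * z, -s * x + c * z  # about Y
    c, s = math.cos(az), math.sin(az)
    x, y = c * x - s * y, s * x + c * y   # about Z
    return x, y, z
```

With all three angles at zero (the knobs in their middle positions) the point is returned unchanged, which is what the "O" gadgets restore.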

2.1.1.6 Measure Cycle

Users accustomed to the metric system should choose the "meter" setting; those accustomed to the English inch system should choose the "feet" setting. If the 3D modeler is running and the user doesn't want the grid to show, "Off" is the appropriate setting.

2.1.1.7 Grid Size Cycle

This cycle gadget alters the size of the two-dimensional ground ruler. Its sizes follow the selected measure unit. Note: the size can only be altered when the grid is visible.

2.1.1.8 Object Sizer

These sliders are usable when an object is selected in the modeling area. The "N" gadgets at the right end of these sizer gadgets restore the object's size along that axis.

2.1.1.9 Object Turners

These sliders are usable when an object is selected in the modeling area. The "O" gadgets at the right end of these rotation gadgets restore the object's rotation around that axis.

2.1.1.10 Object Dimensions

These value-input gadgets are used when exact values are needed. Users are discouraged from exaggerating the dimensions, because the object's defined dimensions may be small in comparison, which makes the model rather inexact.

2.1.1.11 Object Location

These value-input gadgets are used when exact values are needed. They place an object's defined origin at a specific location.

2.1.1.12 Fast View

These four gadgets are for fast modeling. Each rotates the virtual world to a specific view angle, which makes modeling rather easy.

2.1.1.13 Undo

This gadget undoes previous actions in last-in, first-out order: the most recently performed action is undone first. The affected actions are those made in the "3D View & Edit" window.

2.1.1.14 Model Visual Area

Objects are selected and moved around in this area: position the mouse pointer on an object pin and press the mouse select button. Pressing the mouse select button while the pointer is not on an object pin grabs the whole model, so it can be moved around.

2.1.1.15 Help Text Area

This area informs the user about the actions she performs. These help texts can be toggled on/off in the miscellaneous menu.

2.1.2 The Drawing-Stock Window

This window handles the virtual-audio environment in text form. It shows the selected object with its material and flight-path assignments.

2.1.2.1 Objects in Drawing

This list gadget shows all existing model objects in the virtual-audio environment. Selecting an object in this list is equivalent to selecting it in the "Model Visual Area". When several object pins lie on top of each other, this is the appropriate way of selecting objects. Moving a selected object that is part of such a cluster is simplified by pressing a shift key on the keyboard.

2.1.2.2 New

Hit this gadget to insert a new object into the virtual-audio environment. It invokes the "Object-Stock" window, where the user selects an appropriate object and confirms it with "Ok!". The new object is placed at the origin with its defined size and rotation.

2.1.2.3 Sort

After several insertions and probably chaotic naming, the list of existing objects tends to become unclear. Hitting this gadget sorts the objects in alphabetical order.

2.1.2.4 Copy

Use this gadget when the model has several objects of the same kind at different locations.

2.1.2.5 Delete

Deleting an object from the virtual-audio environment is done with this gadget. The deleted object is put on top of the "Drawing Undo-Stack".

2.1.2.6 Clear

Pressing this gadget deletes all objects from the virtual-audio environment and places them on the "Drawing Undo-Stack".

2.1.2.7 Undo

Undoing a deletion is done with this gadget. It takes the top object from the "Drawing Undo-Stack" and reinserts it at its original location in the virtual-audio environment.
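The Delete, Clear and Undo gadgets together describe a last-in, first-out stack. The sketch below illustrates that behaviour only: the `Drawing` class and its methods are hypothetical, objects are reduced to a name and a location, and the assumption that each Undo after a Clear restores one object at a time is made by this sketch, not stated in the manual.

```python
class Drawing:
    """Hypothetical model of the drawing and its undo stack."""

    def __init__(self):
        self.objects = []     # (name, (x, y, z)) pairs, in list order
        self.undo_stack = []  # (list index, object); last deletion on top

    def add(self, name, location):
        self.objects.append((name, location))

    def delete(self, index):
        """Remove one object and push it onto the undo stack."""
        obj = self.objects.pop(index)
        self.undo_stack.append((index, obj))

    def clear(self):
        """Delete every object; each one lands on the undo stack."""
        while self.objects:
            self.delete(0)

    def undo(self):
        """Pop the most recent deletion and put the object back."""
        if not self.undo_stack:
            return None
        index, obj = self.undo_stack.pop()
        self.objects.insert(index, obj)
        return obj
```

Because each object keeps its own location and list position on the stack, repeated Undo after Clear rebuilds the drawing exactly as it was, in reverse order of deletion.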

2.1.2.8 Edit

Hitting this gadget brings the "3D View & Edit" window to the front. The selected object is highlighted in the "Model Visual Area".

2.1.2.9 Type

This text area shows what kind of object the selected object is: furniture, sender or receiver.

2.1.2.10 Material Select

This gadget, with its text area on the left-hand side, invokes the "Material-Stock" window, where the user selects an appropriate material and confirms it with "Ok!". The text area shows the material used for the selected object, or indicates that no material is assigned.

2.1.2.11 Flight Select

This gadget, with its text area on the left-hand side, invokes the "Flight-Stock" window, where the user selects an appropriate flight-path and confirms it with "Ok!". The text area shows the flight-path used for the selected object, or indicates that no flight-path is assigned.

2.1.2.12 Drawing -> Object

Defining a new object is done with this gadget. The size is calculated along the outer world axes, not along the sub-objects' own orientations; a tilted cube therefore does not get the same dimensions as the untilted cube. The width, length and depth are altered with the "X-", "Y-" and "Z-Axis" sliders.
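Measuring along the world axes is the same as taking the axis-aligned bounding box of the object's vertices. A minimal sketch, assuming an object is described by its corner vertices (the manual does not say how 3DA² stores objects); `world_dimensions` and `rotate_about_y` are hypothetical names.

```python
import itertools
import math


def world_dimensions(vertices):
    """Extent of a set of (x, y, z) vertices along the world X, Y, Z axes."""
    xs, ys, zs = zip(*vertices)
    return max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs)


def rotate_about_y(point, angle):
    """Rotate (x, y, z) about the vertical Y axis by angle radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return c * x + s * z, y, -s * x + c * z


unit_cube = list(itertools.product((0.0, 1.0), repeat=3))
tilted = [rotate_about_y(v, math.pi / 4) for v in unit_cube]

print(world_dimensions(unit_cube))  # (1.0, 1.0, 1.0)
print(world_dimensions(tilted))     # roughly (1.414, 1.0, 1.414)
```

The unit cube turned 45 degrees about the vertical axis measures about 1.414 (the square root of 2) along X and Z, because its diagonal now lies along those world axes; this is why a tilted cube does not keep the dimensions of the untilted one.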

2.1.3 The Object-Stock Window

This window handles the virtual-audio object store. It is like a furniture shop where the user gets the appropriate furnishing.

2.1.3.1 Select An Object

This list gadget shows all existing furnishing objects that can be used in the virtual-audio environment. To retrieve other objects while modeling, use the "Project, Merge, Object-Stock" menu. In this way the user can fetch other objects without making them herself.

2.1.3.2 New

Hitting this gadget while modeling transforms the current virtual-audio model into one object. It clears all objects from the virtual-audio environment and replaces them with the new object. The user is then asked for an appropriate name to be associated with this new object.

2.1.3.3 Sort

After several insertions and probably chaotic naming, the list of existing objects tends to become unclear. Hitting this gadget sorts the objects in alphabetical order.

2.1.3.4 Copy

Use this gadget when the model needs several objects of the same kind with small differences. The differences are made in the main modeler window after hitting the "Edit" button.

2.1.3.5 Delete

Deleting an object from the object store is done with this gadget. The deleted object is put on top of the "Objects Undo-Stack".

2.1.3.6 Clear

Pressing this gadget deletes all objects from the object store and places them on the "Objects Undo-Stack".

2.1.3.7 Undo

Undoing a deletion is done with this gadget. It takes the top object from the "Objects Undo-Stack" and reinserts it in the object store for immediate use.

2.1.3.8 Edit

Hitting this button makes it possible to change the properties of the selected object. It invokes the modeler, but the virtual-audio model is replaced by the selected object. The model is pushed onto a stack of models and is taken from it again when the "Drawing -> Object ..." button is hit.

2.1.3.9 Ok!

This confirms the selected object as a valid selection that will be used in the virtual-audio environment. After hitting the "New..." button in the "Drawing-Stock" window and finding the right object, confirm the choice by hitting "Ok!".

2.1.3.10 Cancel

This rejects the selected object as an invalid selection that will not be used in the virtual-audio environment. If the right object cannot be found after hitting the "New..." button in the "Drawing-Stock" window, hit "Cancel".

2.1.4 The Material-Stock Window

This window handles the virtual-audio material store. It is like a furniture restoration shop where the user gets the appropriate material.

2.1.4.1 Select a Material

This list gadget shows all existing materials that can be used in the virtual-audio environment. To retrieve other materials while modeling, use the "Project, Merge, Material-Stock" menu. In this way the user can fetch other materials without making them herself.

2.1.4.2 New

Hitting this gadget creates a new material by invoking the "Characteristics" window. The user is then asked for an appropriate name, frequency response, directivity and phase characteristics to be associated with this new material.

2.1.4.3 Sort

After several insertions and probably chaotic naming, the list of existing materials tends to become unclear. Hitting this gadget sorts the materials in alphabetical order.

2.1.4.4 Copy

Use this gadget when the model needs several materials of nearly the same nature. The differences are made in the "Characteristics" window after hitting the "Edit" button.

2.1.4.5 Delete

Deleting a material from the material store is done with this gadget. The deleted material is put on top of the "Materials Undo-Stack".

2.1.4.6 Clear

Pressing this gadget deletes all materials from the material store and places them on the "Materials Undo-Stack".

2.1.4.7 Undo

Undoing a deletion is done with this gadget. It takes the top material from the "Materials Undo-Stack" and reinserts it in the material store for immediate use.

2.1.4.8 Edit

Hitting this button makes it possible to change the properties of the selected material. It invokes the "Characteristics" window, where the user can change the properties of the material.

2.1.4.9 Type

This text area shows what kind of material the selected material is: furniture (absorbers), sender or receiver.

2.1.4.10 Ok!

This confirms the selected material as a valid selection that will be used in the virtual-audio environment. After hitting the "Material Select..." button in the "Drawing-Stock" window and finding the right material, confirm the choice by hitting "Ok!".

2.1.4.11 Cancel

This rejects the selected material as an invalid selection that will not be used in the virtual-audio environment. If the right material cannot be found after hitting the "Material Select..." button in the "Drawing-Stock" window, and nothing is known about the material, hit "Cancel". If the material's properties are known, create it instead by hitting the "New..." button and confirm with "Ok!" in both the "Characteristics" and the "Material-Stock" window.

2.1.5 The Flight-Stock Window

This window handles the store of virtual-audio flight-paths. It works like a travel agency where the user picks the appropriate flight route.

2.1.5.1 Select a Flight

This list gadget shows all existing flight-paths that can be used in the virtual-audio environment. Other flight-paths can be retrieved while modeling with the "Project Merge Flights-Stock" menu. In this way the user can fetch flight-paths without making them herself.

2.1.5.2 New

Not implemented! Create new flight-paths using the template described in Appendix A.

2.1.5.3 Sort

After several insertions and possibly chaotic naming, the list of existing flight-paths tends to become unclear. Hitting this gadget sorts the flight-paths into alphabetical order.

2.1.5.4 Copy

Not implemented! Create new flight-paths using the template described in Appendix A.

2.1.5.5 Delete

This gadget deletes a flight-path from the travel agency. The deleted flight-path is put on top of the "Flight-paths Undo-Stack".

2.1.5.6 Clear

Pressing this gadget deletes all flight-paths from the travel agency and places them on the "Flight-paths Undo-Stack".

2.1.5.7 Undo

This gadget undoes a deletion. It takes the top flight-path from the "Flight-paths Undo-Stack" and inserts it into the travel agency for immediate use.

2.1.5.8 Edit

Not implemented! Create new flight-paths using the template described in Appendix A.

2.1.5.9 Ok!

This affirms the selected flight-path as a valid selection that will be used in the virtual-audio environment. After hitting the "Flight-path Select..." button in the "Drawing-Stock" window and finding the right flight-path, affirm the choice with an "Ok!" hit.

2.1.5.10 Cancel

This affirms the selected flight-path as an invalid selection that will not be used in the virtual-audio environment. If the right flight-path is not found after hitting the "Flight-path Select..." button in the "Drawing-Stock" window, affirm this with a "Cancel" hit.

2.1.6 The Characteristics Window

This window handles the material properties. It is an affirmation window that is normally invoked by hitting the "New..." or "Edit..." button in the "Material-Stock" window.

2.1.6.1 Name

This string input gadget holds the name associated with the material. When the change is affirmed with "Ok!", the name is updated in every instance where the material is used.

2.1.6.2 Type Cycle

This cycle gadget sets the material type, i.e. whether the material is treated as furniture, sender or receiver.

2.1.6.3 Graph Showing Cycle

This cycle gadget selects which frequency-dependent graph is shown: either the absorption (furniture) or response (senders and receivers), or the phase dependency.

2.1.6.4 Graph Area

This area is edited freehand. Holding down the mouse select button, the user draws the absorption (furniture), response (senders and receivers) or phase dependency across the various frequencies.

2.1.6.5 Decimal Entries

If formal correctness is needed, these integer input gadgets are of great help. When editing the absorption (furniture) or response (senders and receivers), the values range from 0 to 100. The phase values range from 0 to 360.
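To show how the two decimal entries of a furniture material can be read physically, here is a minimal Python sketch. It assumes that the 0 to 100 absorption entry is the percentage of incident sound energy absorbed (the absorption coefficient alpha times 100) and that the 0 to 360 phase entry is a phase shift in degrees on reflection. Both are assumptions, not documented 3DA² behavior. The standard relation alpha = 1 - |R|^2 then gives the magnitude of the complex pressure reflection factor R.

```python
import cmath
import math


def reflection_factor(absorption_percent: float, phase_degrees: float) -> complex:
    """Complex pressure reflection factor from the two decimal entries.

    Assumes absorption_percent = 100 * alpha (energy absorbed) and
    phase_degrees = phase shift on reflection; both are illustrative.
    """
    if not 0 <= absorption_percent <= 100:
        raise ValueError("absorption must be in 0..100")
    if not 0 <= phase_degrees <= 360:
        raise ValueError("phase must be in 0..360")
    alpha = absorption_percent / 100.0      # energy absorption coefficient
    magnitude = math.sqrt(1.0 - alpha)      # |R|, since alpha = 1 - |R|^2
    return cmath.rect(magnitude, math.radians(phase_degrees))


r = reflection_factor(75, 180)
print(abs(r) ** 2)  # fraction of incident energy that is reflected
```

A material with 75 % absorption therefore reflects only a quarter of the energy, which corresponds to half of the pressure amplitude.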

2.1.6.6 Directivity Buttons

These buttons set the directivity at the corresponding frequencies. Note: only omnidirectional, bidirectional and cardioid radiation are available at the moment. If further, more specific directivity patterns are needed, the heuristic ray-trace function has to be reprogrammed.
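The three available patterns correspond to the textbook first-order polar patterns. The following Python sketch gives their gain as a function of the angle from the main axis. How 3DA² evaluates directivity internally is not stated here, so these formulas are the standard definitions, not the program's code. The per-band dictionary is a hypothetical stand-in for one directivity button per frequency.

```python
import math


def directivity_gain(pattern: str, angle_degrees: float) -> float:
    """Textbook first-order polar patterns; 0 degrees is on-axis."""
    c = math.cos(math.radians(angle_degrees))
    if pattern == "omni":
        return 1.0                  # equal radiation in all directions
    if pattern == "bi":
        return c                    # figure-of-eight; rear lobe has inverted polarity
    if pattern == "cardioid":
        return 0.5 * (1.0 + c)      # null directly behind the source
    raise ValueError(f"unknown pattern: {pattern}")


# Hypothetical per-band settings, one "button" per frequency band (Hz).
band_patterns = {125: "omni", 1000: "cardioid", 8000: "cardioid"}

for band, pattern in band_patterns.items():
    gains = [round(directivity_gain(pattern, a), 2) for a in (0, 90, 180)]
    print(band, pattern, gains)
```

Any further pattern would need its own gain function, which matches the note that the ray-trace function must be reprogrammed for other directivities.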

2.1.6.7 Color

This entity is reserved for further enhancements, such as solid three-dimensional environments in the modeler window. It is not implemented yet because of the lack of standardized functions for fast graphics cards with Gouraud shading and z-buffering.

2.1.6.8 Ok!

This affirms the edited material as a valid material that can be used in the virtual-audio environment. After hitting the "New..." or "Edit..." button in the "Material-Stock" window and editing the properties to the right values, affirm the edits with an "Ok!" hit.

2.1.6.9 Undo Edits

Hitting this button reverts the material settings to those in effect before the new edits.

2.1.6.10 Cancel

This affirms the edited material as an invalid edit that will not be used in the virtual-audio environment. If the properties could not be set right after hitting the "New..." or "Edit..." button in the "Material-Stock" window, affirm this with a "Cancel" hit.

2.1.7 The Main Calculation Window: Tracer, Normalizer & Auralizer

This window handles the ray-tracer, the echogram-normalizer and the sample-auralizer. Simple tasks are conveniently computed with this window, but more complex virtual-audio environments should be handled with the ARexx approach. The window is mainly intended for checking purposes, especially of the ray-trace heuristic. See Appendix E.7 for the graphical layout.

2.1.7.1 Tracer Setting Cycles

These cycle gadgets control the heuristic ray-trace function and how it calculates and reacts to the incoming data, i.e. the virtual-audio model. Each setting can be high, medium, low or auto. If a more stringent approach is needed, ARexx is the right tool. The values behind these settings are computed by a special function that depends on some basic properties of the virtual-audio model, so a given setting does not mean the same thing for different virtual-audio models.

2.1.7.2 Reverberation Distribution

This area visualizes the reverberation distribution at a specific relative humidity, i.e. the reverberation time as a function of frequency. Weird-looking distributions are probably due to the use of some extraneous material.

2.1.7.3 R. Humidity

This cycle gadget changes the relative humidity of the air. The greatest differences appear in the high-frequency range, and usually there is little reason to change this value.
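3DA² derives the reverberation distribution from the ray-trace. A quick plausibility check, however, is Sabine's classic estimate with an air-absorption term, T = 0.161 V / (A + 4 m V). Here V is the room volume, A is the sum of surface areas times absorption coefficients, and m is the energy attenuation coefficient of air. The coefficient m grows with frequency and depends on humidity, which is why humidity mainly shows up in the high-frequency part. The following Python sketch is a textbook cross-check, not 3DA²'s method. Values of m must be taken from an air-absorption table for the chosen humidity. The numbers in the usage line are illustrative only.

```python
def sabine_rt60(volume_m3, surfaces, air_attenuation_m=0.0):
    """Sabine reverberation time in seconds for one frequency band.

    surfaces: iterable of (area_m2, absorption_coefficient) pairs.
    air_attenuation_m: energy attenuation coefficient m of air (1/m)
    for this band; it depends on humidity and must be looked up.
    """
    absorption_area = sum(area * alpha for area, alpha in surfaces)
    total = absorption_area + 4.0 * air_attenuation_m * volume_m3
    if total <= 0:
        raise ValueError("room has no absorption")
    return 0.161 * volume_m3 / total


# Illustrative numbers only: the same room without and with air absorption.
print(sabine_rt60(100.0, [(100.0, 0.2)]))
print(sabine_rt60(100.0, [(100.0, 0.2)], air_attenuation_m=0.01))
```

If the traced distribution differs wildly from such an estimate for a simple room, an extraneous material or a misbehaving heuristic is a likely cause.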

2.1.7.4 Energy Hits

This area shows all successfully traced rays, i.e. those that find a way from a sender to a receiver, or vice versa, depending on the kind of calculation used.

2.1.7.5 Auralizing Sample

This shows the default sample used in the auralizing procedure. More complex auralizing schemes are possible in ARexx mode.

2.1.7.6 Set Auralizing Sample

This button opens a file requester in which an appropriate sample file is selected.

2.1.7.7 Computer

This area shows where the program currently is in the calculation procedure, i.e. whether it is ray-tracing, echogram-normalizing or sample-auralizing. The checkmark toggles select which calculations are performed when running forward/backward calculations.

2.1.7.8 Show x Trace

When the calculations are finished, the echogram is visualized automatically. There are two ways of ray-tracing, forward and backward, and the "Show Forward Trace" and "Show Backward Trace" buttons flip between the two results. The "Show Merged Trace" button adds the results. For a well-behaved heuristic ray-trace function, the merged result should not differ greatly from the single results.

2.1.7.9 x Computing

There are two ways of ray-tracing: forward and backward. A forward ray-trace is performed with the "Forward Computing" button and a backward ray-trace with the "Backward Computing" button. To check that the heuristic ray-trace function is well behaved, both methods are usually run, and the "Merged Computing" button is a short-cut for this.
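The forward/backward consistency check can be put in numbers. The following Python sketch treats each echogram as a list of energy values per time bin. It merges the two by averaging (a normalized sum; whether 3DA² averages or plainly adds is an assumption). It measures their disagreement with a normalized L1 distance: 0 means identical, and 1 means the energy falls in completely different bins. The metric and any acceptance threshold are hypothetical choices for illustration, not part of 3DA².

```python
def merged_echogram(forward, backward):
    """Average two equally long echograms (energy per time bin)."""
    if len(forward) != len(backward):
        raise ValueError("echograms must have the same length")
    return [(f + b) / 2.0 for f, b in zip(forward, backward)]


def relative_difference(a, b):
    """Normalized L1 distance between two non-negative echograms (0..1)."""
    total = sum(a) + sum(b)
    if total == 0:
        return 0.0
    return sum(abs(x - y) for x, y in zip(a, b)) / total


forward = [1.0, 0.5, 0.25, 0.1]
backward = [0.9, 0.55, 0.2, 0.1]
print(merged_echogram(forward, backward))
print(relative_difference(forward, backward))  # small value: consistent traces
```

A large value suggests that the heuristic treats the two tracing directions differently, which is exactly what the merged view is meant to reveal.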

2.1.7.10 Pause ||

If computing power is urgently needed for something else, this button temporarily halts the calculation procedure.

2.1.7.11 Stop Computing!

This button aborts the calculation. It comes in handy when a sudden "I forgot that thing!" pops into mind.

2.1.7.12 Receiver Cycle

This dynamic cycle gadget selects which receiver is taken into account when showing the normalized echogram. Its menu contains all receiver object names. Changing the receiver automatically updates the echogram visualizing area.

2.1.7.13 Sender Cycle

This dynamic cycle gadget selects which sender is taken into account when showing the normalized echogram. Its menu contains all sender object names. Changing the sender automatically updates the echogram visualizing area.

2.1.7.14 Echogram Area

This area visualizes the normalized echogram after a computing session. After the first calculation, it shows the echogram from the first encountered sender to the first encountered receiver (or the reverse, depending on the kind of calculation). A sample editing program can give a more detailed picture, so this area is not meant for deep analysis or theory proving.

2.1.8 The Preferences Window

This window edits the preferences file that is read when 3DA² starts. A normal text editor may be easier on some occasions, but the standard file requesters used here are of great help.

2.1.8.1 Rigid Paths

These string input gadgets accept either keyboard input or the selection made after hitting the "Set..." button.

2.1.8.2 RGB- Color Adjust

A color is changed by selecting it and then moving the R, G and B sliders to the desired values. The color is updated in real time.

2.1.8.3 Use

If the change to the preferences is only temporary, press this button after editing.

2.1.8.4 Save Edits

If the change to the preferences is a lasting one, press this button after editing.

2.1.8.5 Undo Edits

If an error has occurred or a slip of the mind was made, this button restores the previous preference settings.

2.1.8.6 Cancel

If the preferences need no alteration, press this button. Everything then stays as it was before this window was opened.

2.2 How to Model Virtual Audio Environments

This part covers the essentials of the modeling work, and it assumes that the program handling is already well known. The fastest way to learn the program is simply to play around with it for a while; handling speeds up as time passes. Read the following part once you feel you know the functions and locations of the controls.

2.2.1 Extracting The Necessities

Modeling is the hardest part of working with virtual-audio environments, especially for beginners who do not yet know the quirks of the heuristic ray-trace function. Even when this function was written by the modeler, which is usually the case, not all of its effects can be predicted.

Extreme exactness is usually not required, and it is therefore better not to model everything in exact detail. The exactness needed depends on the room dimensions and on the settings to be used: higher diffraction, frequency and phase accuracy directly imply that higher exactness is needed.

It is usually very hard to find the correct level of granularity, which is highly dependent on the heuristic functions. It is therefore essential to write the Log in an appropriate way (see B.5).
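One common rule of thumb from geometrical acoustics can help when choosing the granularity. A surface acts as a specular reflector only when it is large compared with the wavelength. Smaller details mostly scatter or diffract sound and can often be merged into larger objects. The following Python sketch computes wavelengths with the textbook speed of sound (about 343 m/s at 20 °C). It flags objects smaller than one wavelength at the highest frequency of interest. The one-wavelength threshold is an assumption for illustration, not the criterion used by the 3DA² heuristic.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C


def wavelength(frequency_hz, c=SPEED_OF_SOUND):
    """Wavelength in meters."""
    return c / frequency_hz


def worth_modeling(object_size_m, highest_frequency_hz, c=SPEED_OF_SOUND):
    """Rule of thumb: keep an object as a separate reflector only if it is
    at least about one wavelength across at the highest frequency of interest."""
    return object_size_m >= wavelength(highest_frequency_hz, c)


for f in (125, 1000, 8000):
    print(f, "Hz:", round(wavelength(f), 3), "m")
```

A 10 cm object is thus irrelevant as a reflector at 125 Hz (wavelength about 2.7 m) but matters in the kHz range. This is why higher frequency accuracy calls for finer modeling.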

2.2.2 Modeling

Entering the desired audio model is the next step, and it is relatively easy. First, open the "Drawing-Stock" window, either from the menu or with "Right-Amiga D". This window lists all objects in the virtual-audio model; naturally, there are none when the program is started from scratch. Second, insert all the larger objects and the room boundaries. Naming these coherently is for the modeler's own good. At this stage the level of granularity is essential, because modeling at the wrong level can make calculations very tedious or badly malformed.

After the actual modeling work, usually done with the perspective off, the materials should be assigned. Selecting materials is not an easy task. Smooth, hard and heavy objects often reflect almost totally, while fluffy materials with lots of holes are the opposite. This is merely a rule of thumb. Normally the right material should either be looked up in a reference book on absorption coefficients or be measured with the latest methods; the latter is more expensive, and that sort of exactness is usually not needed.

Up to this point the virtual-audio model is static, and assigning a flight-path to some objects makes it dynamic. Since this program is a prototype for a real implementation of three-dimensional audio environments, the manufacturing of flight-paths has been left to user-made programs derived from the template described in A.4.
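The template in A.4 is not reproduced in this section, so its exact file format is unknown here. The following Python sketch shows only the core of a user-made flight-path program. It interpolates linearly between hand-placed keyframes and samples the position at a fixed time step d, the same kind of step the dynamic ARexx examples advance with `DISPLACEMENT TIME FORWARD d`. The keyframe layout, the function names and the output list are hypothetical and would have to be adapted to the A.4 template.

```python
from bisect import bisect_right


def position_at(keyframes, t):
    """Linear interpolation between (time_s, (x, y, z)) keyframes sorted by time."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t) - 1           # index of the segment start
    (t0, p0), (t1, p1) = keyframes[i], keyframes[i + 1]
    w = (t - t0) / (t1 - t0)                 # 0 at the segment start, 1 at its end
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def sample_flight(keyframes, d):
    """Positions every d seconds from the first to the last keyframe."""
    if d <= 0:
        raise ValueError("time step must be positive")
    start, end = keyframes[0][0], keyframes[-1][0]
    steps = int(round((end - start) / d))
    return [(start + n * d, position_at(keyframes, start + n * d))
            for n in range(steps + 1)]


# A hypothetical walk: 2 m along x in the first second, then 1 m along z.
walk = [(0.0, (0.0, 0.0, 0.0)), (1.0, (2.0, 0.0, 0.0)), (2.0, (2.0, 0.0, 1.0))]
for t, p in sample_flight(walk, 0.5):
    print(t, p)
```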

After these stages the actual ray-trace calculation should be performed. If it is uncertain whether the heuristic function is well behaved, the two calculation methods (forward and backward) are very useful for checking.

2.2.3 Splitting The Work

Several people can also work on the same problem. The natural way of splitting the workload is into the following crews: primitive object modeling, material definition and flight-path manufacturing. The object modeling crew can further split its work so that each member concentrates on a different part of the virtual-audio model.

2.2.4 Joining The Work

Combining the work can be tricky. Normally someone in the development team knows everybody else's naming conventions, which helps a lot. It is essential that everybody uses clear and descriptive names for objects, materials and flight-paths, to make the joining as easy as possible. The actual ray-trace calculation is normally performed after this, and it should be stressed that work with big models requires a well-behaved heuristic as a basic criterion.
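Before the crews' stores are joined, a mechanical check for clashing names can save a lot of confusion. The following Python sketch is a hypothetical helper, not a 3DA² feature. It takes the names each crew has used and reports every name claimed by more than one crew. Names are compared in upper case as a precaution, because ARexx turns unquoted symbols such as `LEFT_EAR` into upper case. Whether 3DA² itself distinguishes case is not stated here.

```python
from collections import defaultdict


def find_name_clashes(stores):
    """stores: dict crew -> iterable of object/material/flight-path names.

    Returns {NAME: sorted list of crews} for names used by more than one crew.
    Comparison is case-insensitive (an assumption, see above).
    """
    owners = defaultdict(set)
    for crew, names in stores.items():
        for name in names:
            owners[name.upper()].add(crew)
    return {name: sorted(crews) for name, crews in owners.items() if len(crews) > 1}


crews = {
    "walls": ["Wall_North", "Chair"],
    "furniture": ["CHAIR", "Table"],
}
print(find_name_clashes(crews))
```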

2.2.5 The Log-Cog Scripture

Scientific work with 3DA² has some fundamental criteria, which are surveyed in Appendix B. Splitting the workload may introduce severe problems concerning these criteria, so it is essential that everybody is well aware of the facts stated in Appendix B.

Naturally, the Log writing should be split in the same way as the modeling. The Log entries should be very clear and descriptive, and they should be understood by the whole team. In contrast, the Cog compilation should be done by the person with the most rigorous knowledge of virtual-audio environments.

2.3 How to Write 3DA² ARexx scripts

Those accustomed to programming various applications should not have any problems with the ARexx programming facilities. Learning ARexx is not the aim of this section; there are plenty of books on ARexx and how to program with it. I therefore only give some easy examples that show the core 3DA² ARexx keywords in action.

The first example is a static living-room environment with a Hi-Fi stereo set, followed by the static living-room quadriphonic environment. The static models conclude with a compartment filled with various sounds from all over the place.

Moving on to dynamic environments, the rather fun classroom environment with lots of commotion is the first easy example. The more complex environment of a train station, harbor and airport in a big city shows the full potential of ARexx scripts in dynamic models.

Finally, the science-fiction models start with the example of a room with growing room boundaries. The last example takes place at a lunar space camp with an atmosphere (of course!) and various mumbo-jumbo sounds. These two examples are pure science fiction and should not be taken as scientific results in any Log or Cog. Nevertheless, they can reveal inconsistencies in the heuristic function, so it can be very useful not to be strict in every scientific aspect.

2.3.1 Start-up Example

/******************************************************************

* *

* 3DA² Simple script. *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* SIMPLE_ROOM, BODY_PARTS, SENDERS_RECEIVERS *

* START_SAMPLE, SAMPLE_L, SAMPLE_R, END_SAMPLE *

* *

* Computed data: *

* AURALIZED_L, AURALIZED_R *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

/* Send messages to 3DA² */

ADDRESS "3DAUDIO.1"

/* Load an audio model */

LOAD DRAWING "SIMPLE_ROOM"

/* Load body parts as primitive objects*/

LOAD OBJECTS "BODY_PARTS"

/* Load diverse senders and receivers */

LOAD MATERIALS "SENDERS_RECEIVERS"

MEASURE METER /* Meter as measuring unit */

/* Insert ears */

OBJECT INSERT EAR LEFT_EAR 0.1 0.9 0

OBJECT INSERT EAR RIGHT_EAR -0.1 0.9 0

/* Map healthy ear characteristics */

OBJECT MATERIAL LEFT_EAR HEALTHY_EAR

OBJECT MATERIAL RIGHT_EAR HEALTHY_EAR

/* Insert speakers */

OBJECT INSERT SPEAKER LEFT_SPEAKER -2.0 1.0 2

OBJECT INSERT SPEAKER RIGHT_SPEAKER 2.0 1.0 2

/* Map RTL3 speaker characteristics */

OBJECT MATERIAL LEFT_SPEAKER TDL_RTL3

OBJECT MATERIAL RIGHT_SPEAKER TDL_RTL3

CLEAR OBJECTS /* Clearing unnecessary data */

CLEAR MATERIALS /* Clearing unnecessary data */

/* Non excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 50 1.0 25 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 44100

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 32

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 44100

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 16

SPECIAL_FX FLASH /* Flash screen */

SPECIAL_FX PLAY_SOUND START_SAMPLE /* Audio message */

CALL TIME('R') /* Reset clock */

/* Trace from the left speaker to the left ear */

AUDIOTRACE FORWARD LEFT_SPEAKER LEFT_EAR

/* Trace from the right speaker to the right ear */

AUDIOTRACE FORWARD RIGHT_SPEAKER RIGHT_EAR

/* Integrate the echogram from 0 to 80 ms */

u=ECHOGRAM_WEIGHT FORWARD LEFT_SPEAKER LEFT_EAR G(X)*G(X) 0 0.08

/* Integrate the echogram from 80 ms to infinity */

d=ECHOGRAM_WEIGHT FORWARD LEFT_SPEAKER LEFT_EAR G(X)*G(X) 0.08 -1

/* Write the clarity of this virtual-audio environment */

SAY "This VAE's clarity is" 10*log(u/d)/log(10) "dB."

/* Compute normalized echogram and convolve it with left sample */

AURALIZE FORWARD LEFT_SPEAKER LEFT_EAR SAMPLE_L AURALIZED_L 0 -1

/* Compute normalized echogram and convolve it with right sample */

AURALIZE FORWARD RIGHT_SPEAKER RIGHT_EAR SAMPLE_R AURALIZED_R 0 -1

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
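The two `ECHOGRAM_WEIGHT` calls in the script integrate the squared echogram, G(X)*G(X), from 0 to 80 ms and from 80 ms to the end. Their ratio gives the clarity C80 = 10 log10(early energy / late energy), expressed in dB with a base-10 logarithm. The following Python sketch performs the same computation on a sampled impulse response outside 3DA². The function name and the list-of-samples input are assumptions for illustration.

```python
import math


def clarity_c80(impulse_response, sample_rate, split_s=0.080):
    """Clarity C80 in dB from a sampled impulse response (pressure values)."""
    split = int(round(split_s * sample_rate))            # 80 ms as a sample index
    early = sum(p * p for p in impulse_response[:split])  # 0 .. 80 ms
    late = sum(p * p for p in impulse_response[split:])   # 80 ms .. end
    if late == 0:
        return math.inf
    if early == 0:
        return -math.inf
    return 10.0 * math.log10(early / late)


# Equal early and late energy gives 0 dB (sample rate 100 Hz for brevity).
ir = [1.0] + [0.0] * 7 + [1.0] + [0.0] * 3
print(clarity_c80(ir, 100))
```

Positive values mean that the early sound dominates, which is usually perceived as clear, while negative values indicate a reverberant, muddy impression.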

2.3.2 Simple Static Model

/******************************************************************

* *

* 3DA² Simple Static model, Vivaldi-quadriphony to auralization *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* LIVING_ROOM_ENVIRONMENT *

* START_SAMPLE *

* VIVALDI_FL, VIVALDI_FR (The front channels, quadraphonic) *

* VIVALDI_RL, VIVALDI_RR (The rear channels, quadraphonic) *

* END_SAMPLE *

* *

* Temporary data: *

* VIVALDI_FAL, VIVALDI_FAR *

* VIVALDI_RAL, VIVALDI_RAR *

* *

* Computed data: *

* VIVALDI_AL, VIVALDI_AR *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

/* Send messages to 3DA² */

ADDRESS "3DAUDIO.1"

/* Load an audio model */

LOAD DRAWING "LIVING_ROOM_ENVIRONMENT"

/* Non excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 15 1.0 25 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 8192

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 32

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 44100

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 16

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND START_SAMPLE

/* Reset clock */

CALL TIME('R')

/* Trace from the left front speaker to the left ear */

AUDIOTRACE FORWARD SPEAKER_FL EAR_L

/* Trace from the right front speaker to the right ear */

AUDIOTRACE FORWARD SPEAKER_FR EAR_R

/* Trace from the left rear speaker to the left ear */

AUDIOTRACE FORWARD SPEAKER_RL EAR_L

/* Trace from the right rear speaker to the right ear */

AUDIOTRACE FORWARD SPEAKER_RR EAR_R

/* Compute normalized echogram and convolve it with left front sample. */

AURALIZE FORWARD SPEAKER_FL EAR_L VIVALDI_FL VIVALDI_FAL 0 -1

/* Compute normalized echogram and convolve it with left rear sample. */

AURALIZE FORWARD SPEAKER_RL EAR_L VIVALDI_RL VIVALDI_RAL 0 -1

/* Compute normalized echogram and convolve it with right front sample. */

AURALIZE FORWARD SPEAKER_FR EAR_R VIVALDI_FR VIVALDI_FAR 0 -1

/* Compute normalized echogram and convolve it with right rear sample. */

AURALIZE FORWARD SPEAKER_RR EAR_R VIVALDI_RR VIVALDI_RAR 0 -1

/* Simple mix the two results coming from the speakers to the left. */

SAMPLE SIMPLE_MIX VIVALDI_FAL VIVALDI_RAL VIVALDI_AL

SAMPLE DELETE VIVALDI_FAL

SAMPLE DELETE VIVALDI_RAL

/* Simple mix the two results coming from the speakers to the right */

SAMPLE SIMPLE_MIX VIVALDI_FAR VIVALDI_RAR VIVALDI_AR

SAMPLE DELETE VIVALDI_FAR

SAMPLE DELETE VIVALDI_RAR

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
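Conceptually, each `AURALIZE` line convolves a dry channel with the echogram from one speaker to one ear. `SAMPLE SIMPLE_MIX` then combines the front and rear contributions per ear. The following Python sketch shows these two steps on plain lists. Two assumptions apply. First, the echogram is taken to be already resampled to the sound sample rate (the script traces at 8192 Hz but writes 44100 Hz samples, and that resampling step is omitted). Second, `SIMPLE_MIX` is modeled as a sample-wise average to avoid clipping; 3DA² may instead plainly add the tracks.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution: the auralization of a dry signal."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def simple_mix(a, b):
    """Average two tracks sample by sample, padding the shorter with silence."""
    n = max(len(a), len(b))
    a = a + [0.0] * (n - len(a))
    b = b + [0.0] * (n - len(b))
    return [(x + y) / 2.0 for x, y in zip(a, b)]


# Toy data: two dry channels and two echograms reaching the left ear.
front_left, rear_left = [1.0, 0.0, 0.5], [0.0, 1.0]
h_front, h_rear = [1.0, 0.3], [0.6, 0.2, 0.1]
left_ear = simple_mix(convolve(front_left, h_front), convolve(rear_left, h_rear))
print(left_ear)
```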

2.3.3 Complex Static Model

/******************************************************************

* *

* 3DA² Complex Static model. Yello with some environmental sounds *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* COMPARTMENT_ENVIRONMENT *

* START_SAMPLE *

* YELLO_L, YELLO_R *

* BABY_CRYING, WOMAN_SHOUTING, WC_FLUSH *

* END_SAMPLE *

* *

* Temporary data: *

* YELLO_AL, YELLO_AR *

* BABY_CRYING_AL, BABY_CRYING_AR *

* WOMAN_SHOUTING_AL, WOMAN_SHOUTING_AR *

* WC_FLUSH_AL, WC_FLUSH_AR *

* *

* Computed data: *

* YELLO_A *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

/* Send messages to 3DA² */

ADDRESS "3DAUDIO.1"

/* Load an audio model */

LOAD DRAWING "COMPARTMENT_ENVIRONMENT"

/* Non excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 50 1.0 15 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 5400

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 16

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 32768

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 8

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND START_SAMPLE

/* Reset clock */

CALL TIME('R')

/* Trace from the left speaker to the left ear */

AUDIOTRACE FORWARD SPEAKER_L EAR_L

/* Trace from the right speaker to the right ear */

AUDIOTRACE FORWARD SPEAKER_R EAR_R

/* Trace from the baby to the ears */

AUDIOTRACE FORWARD BABY_MOUTH EAR_L

AUDIOTRACE FORWARD BABY_MOUTH EAR_R

/* Trace from the woman to the ears */

AUDIOTRACE FORWARD WOMAN_MOUTH EAR_L

AUDIOTRACE FORWARD WOMAN_MOUTH EAR_R

/* Trace from WC to the ears */

AUDIOTRACE FORWARD TOILET EAR_L

AUDIOTRACE FORWARD TOILET EAR_R

/* Compute normalized echogram and auralization from the stereo */

AURALIZE FORWARD SPEAKER_L EAR_L YELLO_L YELLO_AL 0 -1

AURALIZE FORWARD SPEAKER_R EAR_R YELLO_R YELLO_AR 0 -1

/* Compute normalized echogram and auralization from the baby */

AURALIZE FORWARD BABY_MOUTH EAR_L BABY_CRYING BABY_CRYING_AL 0 -1

AURALIZE FORWARD BABY_MOUTH EAR_R BABY_CRYING BABY_CRYING_AR 0 -1

/* Compute normalized echogram and auralization from the woman */

AURALIZE FORWARD WOMAN_MOUTH EAR_L WOMAN_SHOUTING WOMAN_SHOUTING_AL 0 -1

AURALIZE FORWARD WOMAN_MOUTH EAR_R WOMAN_SHOUTING WOMAN_SHOUTING_AR 0 -1

/* Compute normalized echogram and auralization from the WC */

AURALIZE FORWARD TOILET EAR_L WC_FLUSH WC_FLUSH_AL 0 -1

AURALIZE FORWARD TOILET EAR_R WC_FLUSH WC_FLUSH_AR 0 -1

/* Mix baby cry into auralized Yello sample at every 20 seconds. */

samplen=SAMPLE LENGTH YELLO_AL

DO i= 0 to samplen by 20

SAMPLE OVER_MIX BABY_CRYING_AL YELLO_AL i

SAMPLE OVER_MIX BABY_CRYING_AR YELLO_AR i

END

/* Mix woman shouting into auralized Yello sample at every 80 seconds. */

samplen=SAMPLE LENGTH YELLO_AL

DO i= 0 to samplen by 80

SAMPLE OVER_MIX WOMAN_SHOUTING_AL YELLO_AL i

SAMPLE OVER_MIX WOMAN_SHOUTING_AR YELLO_AR i

END

/* Mix toilet flushing at the end */

samplenWC=SAMPLE LENGTH WC_FLUSH_AL

samplen=SAMPLE LENGTH YELLO_AL

SAMPLE OVER_MIX WC_FLUSH_AL YELLO_AL samplen-samplenWC

SAMPLE OVER_MIX WC_FLUSH_AR YELLO_AR samplen-samplenWC

/* The resulting auralized sample "YELLO_A", with Yello music as a base and diverse exterior sounds etc., is now finished. Fun listening! */

SAMPLE MAKE_STEREO YELLO_AL YELLO_AR YELLO_A

/* Delete all temporary data */

SAMPLE DELETE YELLO_AL

SAMPLE DELETE YELLO_AR

SAMPLE DELETE BABY_CRYING_AL

SAMPLE DELETE BABY_CRYING_AR

SAMPLE DELETE WOMAN_SHOUTING_AL

SAMPLE DELETE WOMAN_SHOUTING_AR

SAMPLE DELETE WC_FLUSH_AL

SAMPLE DELETE WC_FLUSH_AR

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
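The `SAMPLE OVER_MIX` loops add a short auralized sound into the long Yello track at regular intervals. The toilet flush is aligned so that it ends together with the track, at offset `samplen-samplenWC`. The following Python sketch reproduces that logic on plain lists of samples. It assumes that `OVER_MIX` adds the short sound on top of the long one in place and drops anything past the end. The script counts in the units returned by `SAMPLE LENGTH`, while this sketch counts in samples (seconds times the sample rate).

```python
def over_mix(short, long, offset):
    """Add `short` into `long` starting at sample `offset`, in place.
    Samples that would fall past the end of `long` are dropped."""
    if offset < 0:
        raise ValueError("offset must not be negative")
    for k, s in enumerate(short):
        n = offset + k
        if n >= len(long):
            break
        long[n] += s


def repeat_over_mix(short, long, period):
    """Insert `short` every `period` samples, like the DO ... BY loops."""
    for offset in range(0, len(long), period):
        over_mix(short, long, offset)


track = [0.0] * 10
repeat_over_mix([1.0, 1.0], track, 4)        # periodic insertion
over_mix([0.5], track, len(track) - 1)       # end-aligned, like the WC flush
print(track)
```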

2.3.4 Simple Dynamic Model

/******************************************************************

* *

* 3DA² Simple Dynamic model. Teacher in the classroom *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* CLASS_ROOM_ENVIRONMENT, CLASS_ROOM_FLIGHTS *

* START_SAMPLE *

* LECTURE, PUPIL_1, PUPIL_2, PUPIL_3 *

* BUMBLEBEE *

* END_SAMPLE *

* *

* Temporary data: *

* BUMBLEBEE_AL, PUPIL_1_AL, PUPIL_2_AL, PUPIL_3_AL,LECTURE_AL *

* BUMBLEBEE_AR, PUPIL_1_AR, PUPIL_2_AR, PUPIL_3_AR,LECTURE_AR *

* *

* Computed data: *

* CLASSROOM_A *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

/* Send messages to 3DA² */

ADDRESS "3DAUDIO.1"

/* Load an audio model */

LOAD DRAWING "CLASS_ROOM_ENVIRONMENT"

/* Load some flight-paths */

LOAD FLIGHTS "CLASS_ROOM_FLIGHTS"

/* Map flights to objects in environment */

OBJECT FLIGHT TEACHER WALK_AROUND

OBJECT FLIGHT CATHERINE RUNNING_OUT

OBJECT FLIGHT DENNIS HUNTED

OBJECT FLIGHT MADELEINE CHASING

OBJECT FLIGHT BEE BUZZAROUND

/* Non excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 15 1.0 25 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 4096

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 16

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 19600

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 8

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND START_SAMPLE

/* Reset clock */

CALL TIME('R')

d=0.01 /* Time displacement */

samplen=SAMPLE LENGTH "LECTURE"

DO i=0 TO samplen BY d

/* Trace from sources to the ears */

AUDIOTRACE FORWARD TEACHER EAR_L

AUDIOTRACE FORWARD TEACHER EAR_R

AUDIOTRACE FORWARD CATHERINE EAR_L

AUDIOTRACE FORWARD CATHERINE EAR_R

AUDIOTRACE FORWARD DENNIS EAR_L

AUDIOTRACE FORWARD DENNIS EAR_R

AUDIOTRACE FORWARD MADELEINE EAR_L

AUDIOTRACE FORWARD MADELEINE EAR_R

AUDIOTRACE FORWARD BEE EAR_L

AUDIOTRACE FORWARD BEE EAR_R

/*Compute normalized echograms and convolve it with samples*/

AURALIZE FORWARD TEACHER EAR_L LECTURE LECTURE_AL i i+d

AURALIZE FORWARD TEACHER EAR_R LECTURE LECTURE_AR i i+d

AURALIZE FORWARD CATHERINE EAR_L PUPIL_1 PUPIL_1_AL i i+d

AURALIZE FORWARD CATHERINE EAR_R PUPIL_1 PUPIL_1_AR i i+d

AURALIZE FORWARD DENNIS EAR_L PUPIL_2 PUPIL_2_AL i i+d

AURALIZE FORWARD DENNIS EAR_R PUPIL_2 PUPIL_2_AR i i+d

AURALIZE FORWARD MADELEINE EAR_L PUPIL_3 PUPIL_3_AL i i+d

AURALIZE FORWARD MADELEINE EAR_R PUPIL_3 PUPIL_3_AR i i+d

AURALIZE FORWARD BEE EAR_L BUMBLEBEE BUMBLEBEE_AL i i+d

AURALIZE FORWARD BEE EAR_R BUMBLEBEE BUMBLEBEE_AR i i+d

/* A step in time */

DISPLACEMENT TIME FORWARD d

END

/* All samples have the same length! */

SAMPLE SIMPLE_MIX BUMBLEBEE_AL PUPIL_3_AL PUPIL_3_AL

SAMPLE SIMPLE_MIX PUPIL_3_AL PUPIL_2_AL PUPIL_2_AL

SAMPLE SIMPLE_MIX PUPIL_2_AL PUPIL_1_AL PUPIL_1_AL

SAMPLE SIMPLE_MIX PUPIL_1_AL LECTURE_AL LECTURE_AL

SAMPLE SIMPLE_MIX BUMBLEBEE_AR PUPIL_3_AR PUPIL_3_AR

SAMPLE SIMPLE_MIX PUPIL_3_AR PUPIL_2_AR PUPIL_2_AR

SAMPLE SIMPLE_MIX PUPIL_2_AR PUPIL_1_AR PUPIL_1_AR

SAMPLE SIMPLE_MIX PUPIL_1_AR LECTURE_AR LECTURE_AR

/* This is one noisy classroom */

SAMPLE MAKE_STEREO LECTURE_AL LECTURE_AR CLASSROOM_A

/* Delete all temporary data */

SAMPLE DELETE BUMBLEBEE_AL

SAMPLE DELETE PUPIL_1_AL

SAMPLE DELETE PUPIL_2_AL

SAMPLE DELETE PUPIL_3_AL

SAMPLE DELETE LECTURE_AL

SAMPLE DELETE BUMBLEBEE_AR

SAMPLE DELETE PUPIL_1_AR

SAMPLE DELETE PUPIL_2_AR

SAMPLE DELETE PUPIL_3_AR

SAMPLE DELETE LECTURE_AR

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
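The dynamic script re-traces the scene at every time step d and auralizes only the slice from i to i+d with the echogram valid at that moment. For the slices to line up, the loop variable has to advance by the same d as `DISPLACEMENT TIME FORWARD d`. The following Python sketch shows the underlying technique, block-wise convolution with overlap-add. Each block of the dry signal is convolved with its own impulse response, and the reverberant tails spill over into later blocks. How 3DA² stitches the slices internally is not described here, so this is an assumed equivalent. The `ir_at_block` callback stands in for the per-step ray-trace.

```python
def auralize_time_varying(signal, ir_at_block, block_len):
    """Block-wise auralization of a moving source or receiver.

    signal: dry samples.
    ir_at_block: function block_index -> impulse response (list of floats)
                 valid for that block (hypothetical stand-in for a re-trace).
    block_len: samples per time step, i.e. d seconds times the sample rate.
    """
    out = []
    for b, start in enumerate(range(0, len(signal), block_len)):
        block = signal[start:start + block_len]
        h = ir_at_block(b)
        needed = start + len(block) + len(h) - 1
        if len(out) < needed:                     # grow output for the tail
            out.extend([0.0] * (needed - len(out)))
        for i, s in enumerate(block):
            for j, hj in enumerate(h):
                out[start + i + j] += s * hj      # overlap-add
    return out


# With a constant impulse response the result equals an ordinary convolution.
print(auralize_time_varying([1.0, 2.0, 3.0, 4.0, 5.0], lambda b: [1.0, 0.5], 2))
```

Switching impulse responses abruptly between blocks can cause audible clicks. Practical systems therefore often crossfade between consecutive responses, at the cost of extra computation.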

2.3.5 Complex Dynamic Model

/******************************************************************

* *

* 3DA² Complex Dynamic Model. Outdoors at a train station *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* TRAIN_STATION_DYNAMIC_ENVIRONMENT *

* START_SAMPLE *

* TRAIN_WITH_HORN, AIRCRAFT, BOAT_HORNS, CONVERSATION *

* SPEAKER_VOICE, POLICE_HORN *

* END_SAMPLE *

* *

* Temporary data: *

* TWH_AL, AIR_AL, BOA_AL, POL_AL, CON_AL, SPE_AL *

* TWH_AR, AIR_AR, BOA_AR, POL_AR, CON_AR, SPE_AR *

* *

* Computed data: *

* TRAIN_STATION_A *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

ADDRESS "3DAUDIO.1" /* Send messages to 3DA² */

/* This model has a harbor with moving boats, a police car leaving in a hurry, a passing aircraft, two people conversing and a voice informing from the public address system, all while YOU are wandering around at the train station. */

LOAD DRAWING "TRAIN_STATION_DYNAMIC_ENVIRONMENT"

/* Non excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 10 0.5 15 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 4096

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 16

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 19600

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 8

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND START_SAMPLE

/* Reset clock */

CALL TIME('R')

/* All samples has the same length due to this the dependencies between objects are abandoned! */

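/* Ask 3DA² for the sample length; host results arrive in RESULT */

OPTIONS RESULTS

SAMPLE LENGTH TRAIN_WITH_HORN

samplen=RESULT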

d=0.01 /* Time displacement */

DO i=0 to samplen

/* Trace from sources to the ears */

AUDIOTRACE FORWARD TRAIN EAR_L

AUDIOTRACE FORWARD TRAIN EAR_R

AUDIOTRACE FORWARD AIRCRAFT EAR_L

AUDIOTRACE FORWARD AIRCRAFT EAR_R

AUDIOTRACE FORWARD HARBOR_BOATS EAR_L

AUDIOTRACE FORWARD HARBOR_BOATS EAR_R

AUDIOTRACE FORWARD POLICE_CAR EAR_L

AUDIOTRACE FORWARD POLICE_CAR EAR_R

AUDIOTRACE FORWARD CONVERSATION EAR_L

AUDIOTRACE FORWARD CONVERSATION EAR_R

/* The public address SPEAKER is static, but it is still */

/* traced because the receiving ears are moving. */

AUDIOTRACE FORWARD SPEAKER EAR_L

AUDIOTRACE FORWARD SPEAKER EAR_R

/*Compute normalized echogram and convolve it with samples */

AURALIZE FORWARD TRAIN EAR_L TRAIN_WITH_HORN TWH_AL i i+d

AURALIZE FORWARD TRAIN EAR_R TRAIN_WITH_HORN TWH_AR i i+d

AURALIZE FORWARD AIRCRAFT EAR_L AIRCRAFT AIR_AL i i+d

AURALIZE FORWARD AIRCRAFT EAR_R AIRCRAFT AIR_AR i i+d

AURALIZE FORWARD HARBOR_BOATS EAR_L BOAT_HORNS BOA_AL i i+d

AURALIZE FORWARD HARBOR_BOATS EAR_R BOAT_HORNS BOA_AR i i+d

AURALIZE FORWARD POLICE_CAR EAR_L POLICE_HORN POL_AL i i+d

AURALIZE FORWARD POLICE_CAR EAR_R POLICE_HORN POL_AR i i+d

AURALIZE FORWARD CONVERSATION EAR_L CONVERSATION CON_AL i i+d

AURALIZE FORWARD CONVERSATION EAR_R CONVERSATION CON_AR i i+d

/* The public address SPEAKER is static, but it is still */

/* auralized anew because the receiving ears are moving. */

AURALIZE FORWARD SPEAKER EAR_L SPEAKER_VOICE SPE_AL i i+d

AURALIZE FORWARD SPEAKER EAR_R SPEAKER_VOICE SPE_AR i i+d

/* A step in time */

DISPLACEMENT TIME FORWARD d

END

/* All samples have the same length! */

/* Final mixdown of the auralized parts. */

SAMPLE SIMPLE_MIX TWH_AL AIR_AL AIR_AL

SAMPLE SIMPLE_MIX AIR_AL BOA_AL BOA_AL

SAMPLE SIMPLE_MIX BOA_AL POL_AL POL_AL

SAMPLE SIMPLE_MIX POL_AL CON_AL CON_AL

SAMPLE SIMPLE_MIX CON_AL SPE_AL SPE_AL

SAMPLE SIMPLE_MIX TWH_AR AIR_AR AIR_AR

SAMPLE SIMPLE_MIX AIR_AR BOA_AR BOA_AR

SAMPLE SIMPLE_MIX BOA_AR POL_AR POL_AR

SAMPLE SIMPLE_MIX POL_AR CON_AR CON_AR

SAMPLE SIMPLE_MIX CON_AR SPE_AR SPE_AR

/* This is the resulting train station environment. */

/* Lots of calamity going on, I should say. */

SAMPLE MAKE_STEREO SPE_AL SPE_AR TRAIN_STATION_A

/* Delete all temporary data */

SAMPLE DELETE TWH_AL

SAMPLE DELETE AIR_AL

SAMPLE DELETE BOA_AL

SAMPLE DELETE POL_AL

SAMPLE DELETE CON_AL

SAMPLE DELETE SPE_AL

SAMPLE DELETE TWH_AR

SAMPLE DELETE AIR_AR

SAMPLE DELETE BOA_AR

SAMPLE DELETE POL_AR

SAMPLE DELETE CON_AR

SAMPLE DELETE SPE_AR

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
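Inside each pass of the loop, AURALIZE in essence performs a piecewise convolution. The echogram traced for the current geometry is applied only to the slice of the dry sample between i and i+d, and the reverberant tails of consecutive slices overlap. The Python sketch below expresses that idea under stated assumptions. It is not 3DA²'s internal code. It assumes three things:

- linear interpolation brings an echogram from the Dirac sample frequency (4096 Hz above) up to the sound sample frequency (19600 Hz);
- consecutive blocks are combined with plain overlap-add;
- the output is truncated to the dry sample's length.

The helpers `resample_linear`, `convolve` and `auralize_blocks` are hypothetical names.

```python
from typing import Callable, List


def resample_linear(ir: List[float], src_rate: float, dst_rate: float) -> List[float]:
    """Linearly interpolate an echogram from src_rate (Hz) to dst_rate (Hz)."""
    if not ir:
        return []
    duration = (len(ir) - 1) / src_rate        # seconds between first and last tap
    n_out = int(duration * dst_rate) + 1
    out = []
    for k in range(n_out):
        pos = k * src_rate / dst_rate          # fractional index into ir
        j = int(pos)
        frac = pos - j
        nxt = ir[j + 1] if j + 1 < len(ir) else ir[j]
        out.append(ir[j] * (1.0 - frac) + nxt * frac)
    return out


def convolve(x: List[float], h: List[float]) -> List[float]:
    """Direct-form linear convolution; output length is len(x) + len(h) - 1."""
    if not x or not h:
        return []
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        if xn == 0.0:
            continue                           # silent input adds nothing
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y


def auralize_blocks(dry: List[float], block_len: int,
                    ir_at: Callable[[int], List[float]]) -> List[float]:
    """Time-varying auralization by overlap-add (hypothetical model of the loop).

    dry:       dry source sample at the sound sample frequency
    block_len: samples per time step, i.e. d * sound sample frequency
    ir_at(b):  echogram valid during block b, already at the sound rate
    """
    if block_len <= 0:
        raise ValueError("block_len must be positive")
    out = [0.0] * len(dry)
    for b, start in enumerate(range(0, len(dry), block_len)):
        wet = convolve(dry[start:start + block_len], ir_at(b))
        for k, v in enumerate(wet):
            if start + k < len(out):           # keep every sample equally long
                out[start + k] += v
    return out


if __name__ == "__main__":
    dry = [1.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0]
    echograms = [[1.0, 0.5], [0.25]]           # hypothetical per-step echograms
    print(auralize_blocks(dry, 4, lambda b: echograms[b]))
    # prints [1.0, 0.5, 0.0, 0.0, 0.125, 0.0, 0.0, 0.0]
```

With d = 0.01 s and 19600 Hz output, one step corresponds to a block of 196 samples. In this sketch the echogram is switched abruptly at every block boundary. Crossfading between neighbouring echograms is a common refinement when such switching produces audible steps.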

2.3.6 Simple Science Fiction Model

/******************************************************************

* *

* 3DA² Simple Sci-Fi model. Space and room *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* ROOM_ENVIRONMENT *

* START_SAMPLE *

* LECTURE, SPEAKER *

* BUMBLEBEE *

* END_SAMPLE *

* *

* Temporary data: *

* BUMBLEBEE_AL, LECTURE_AL, SPEAKER_AL *

* BUMBLEBEE_AR, LECTURE_AR, SPEAKER_AR *

* *

* Computed data: *

* ROOM_A *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

/* Send messages to 3DA² */

ADDRESS "3DAUDIO.1"

/* Load an audio model */

LOAD DRAWING "ROOM_ENVIRONMENT"

/* Non-excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 23 1.0 25 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 5120

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 16

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 19600

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 16

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND START_SAMPLE

/* Reset clock */

CALL TIME('R')

d=0.01 /* Time displacement */

/* Ask 3DA² for the sample length; host results arrive in RESULT */

OPTIONS RESULTS

SAMPLE LENGTH "LECTURE"

samplen=RESULT

f=100/samplen /* Displacement smaller than one */

g=0 /* Accumulated frequency displacement */

DO i=0 to samplen

/* Trace from sources to the ears */

AUDIOTRACE FORWARD TEACHER EAR_L

AUDIOTRACE FORWARD TEACHER EAR_R

AUDIOTRACE FORWARD PA_SYS EAR_L

AUDIOTRACE FORWARD PA_SYS EAR_R

AUDIOTRACE FORWARD BEE EAR_L

AUDIOTRACE FORWARD BEE EAR_R

/* Compute normalized echogram and convolve it with samples */

AURALIZE FORWARD TEACHER EAR_L LECTURE LECTURE_AL i i+d

AURALIZE FORWARD TEACHER EAR_R LECTURE LECTURE_AR i i+d

AURALIZE FORWARD PA_SYS EAR_L SPEAKER SPEAKER_AL i i+d

AURALIZE FORWARD PA_SYS EAR_R SPEAKER SPEAKER_AR i i+d

AURALIZE FORWARD BEE EAR_L BUMBLEBEE BUMBLEBEE_AL i i+d

AURALIZE FORWARD BEE EAR_R BUMBLEBEE BUMBLEBEE_AR i i+d

/* A step in time */

DISPLACEMENT TIME FORWARD d

/* Some non-natural events */

DISPLACEMENT OBJECT RESIZE ROOM 0.1 0.2 0.3

g=g+f

MATERIAL CHANGE FREQUENCY WALLS g g g g g g g g g g g

END

/* All samples have the same length! */

SAMPLE SIMPLE_MIX BUMBLEBEE_AL SPEAKER_AL SPEAKER_AL

SAMPLE SIMPLE_MIX SPEAKER_AL LECTURE_AL LECTURE_AL

SAMPLE SIMPLE_MIX BUMBLEBEE_AR SPEAKER_AR SPEAKER_AR

SAMPLE SIMPLE_MIX SPEAKER_AR LECTURE_AR LECTURE_AR

/* This is one weird classroom */

SAMPLE MAKE_STEREO LECTURE_AL LECTURE_AR ROOM_A

/* Delete all temporary data */

SAMPLE DELETE BUMBLEBEE_AL

SAMPLE DELETE SPEAKER_AL

SAMPLE DELETE LECTURE_AL

SAMPLE DELETE BUMBLEBEE_AR

SAMPLE DELETE SPEAKER_AR

SAMPLE DELETE LECTURE_AR

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
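A simple ramp drives the non-natural events in this classroom. On every pass, g grows by f = 100/samplen, and its current value is handed to all eleven values of MATERIAL CHANGE FREQUENCY WALLS. DO i=0 TO samplen runs samplen+1 times, and g=g+f comes before the material change. The first value sent is therefore f, and the last is 100*(samplen+1)/samplen, slightly above 100. The comment's "displacement smaller than one" holds whenever samplen exceeds 100.

The Python snippet below only reproduces this bookkeeping, using exact fractions. ARexx computes in decimal arithmetic with finite precision, so its last digits may differ slightly. `material_ramp` is a hypothetical helper, and the snippet does not model how 3DA² interprets the values.

```python
from fractions import Fraction
from typing import List, Tuple


def material_ramp(samplen: int, total: int = 100) -> Tuple[Fraction, List[Fraction]]:
    """Return f and every value of g handed to MATERIAL CHANGE FREQUENCY."""
    if samplen <= 0:
        raise ValueError("samplen must be positive")
    f = Fraction(total, samplen)      # f=100/samplen
    g = Fraction(0)                   # g=0
    values = []
    for _ in range(samplen + 1):      # DO i=0 TO samplen: samplen+1 passes
        g += f                        # g=g+f precedes the material change
        values.append(g)
    return f, values


if __name__ == "__main__":
    f, values = material_ramp(400)
    print(f, values[0], values[-1])   # prints 1/4 1/4 401/4
```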

2.3.7 Complex Science Fiction Model

/******************************************************************

* *

* 3DA² Complex SCI-FI Model. Terminator De Terminatei *

* *

* Denis Tumpic 1995 *

* *

* External data: *

* TERMINUS_ENVIRONMENT *

* START_SAMPLE *

* SPACE_SHIP, METEORITES, IMPACTS, BLASTINGS *

* NARRATOR_VOICE, BACKGROUND_SPACE_SOUND_A *

* END_SAMPLE *

* *

* Temporary data: *

* SS_AL, MET_AL, IMP_AL, BLA_AL, NARR_AL *

* SS_AR, MET_AR, IMP_AR, BLA_AR, NARR_AR *

* *

* Computed data: *

* TERMINUS_A *

* *

******************************************************************/

/* Load and run 3DA² */

ADDRESS COMMAND "run 3DA²:3DA² REXX >NIL:"

/* Send messages to 3DA² */

ADDRESS "3DAUDIO.1"

/* Load an audio model */

/* This model has a lunar space station with an atmosphere. */

/* Meteorites are falling and smashing into the surface. */

/* The narrator tells what is happening in this */

/* Space War I. */

LOAD DRAWING "TERMINUS_ENVIRONMENT"

/* Non-excessive data format */

AUDIOTRACE SETTINGS SMALL_DATA MEMORY

/* Simple trace settings */

AUDIOTRACE SETTINGS ALL 18 0.9 25 0.0 0.0 0.0 0.0

/* Normalize the echograms */

ECHOGRAM SETTINGS LINEAR_NORMALIZE

/* Echogram sample frequency & data width */

AURALIZE SETTINGS DIRAC_SAMPLE_FREQUENCY 2048

AURALIZE SETTINGS DIRAC_SAMPLE_DATA_WIDTH 8

/* Resulting auralized sample frequency and data width */

AURALIZE SETTINGS SOUND_SAMPLE_FREQUENCY 16384

AURALIZE SETTINGS SOUND_SAMPLE_DATA_WIDTH 8

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND START_SAMPLE

/* Reset clock */

CALL TIME('R')

d=0.01 /* Time displacement */

/* All samples have the same length, so dependencies are ignored! */

/* Ask 3DA² for the sample length; host results arrive in RESULT */

OPTIONS RESULTS

SAMPLE LENGTH SPACE_SHIP

samplen=RESULT

DO i=0 to samplen

/* Trace from sources to the ears */

AUDIOTRACE FORWARD SPACE_SHIP EAR_L

AUDIOTRACE FORWARD SPACE_SHIP EAR_R

AUDIOTRACE FORWARD METEORITES EAR_L

AUDIOTRACE FORWARD METEORITES EAR_R

AUDIOTRACE FORWARD IMPACTS EAR_L

AUDIOTRACE FORWARD IMPACTS EAR_R

AUDIOTRACE FORWARD BLASTINGS EAR_L

AUDIOTRACE FORWARD BLASTINGS EAR_R

/* Compute normalized echogram and convolve it with samples */

AURALIZE FORWARD SPACE_SHIP EAR_L SPACE_SHIP SS_AL i i+d

AURALIZE FORWARD SPACE_SHIP EAR_R SPACE_SHIP SS_AR i i+d

AURALIZE FORWARD METEORITES EAR_L METEORITES MET_AL i i+d

AURALIZE FORWARD METEORITES EAR_R METEORITES MET_AR i i+d

AURALIZE FORWARD IMPACTS EAR_L IMPACTS IMP_AL i i+d

AURALIZE FORWARD IMPACTS EAR_R IMPACTS IMP_AR i i+d

AURALIZE FORWARD BLASTINGS EAR_L BLASTINGS BLA_AL i i+d

AURALIZE FORWARD BLASTINGS EAR_R BLASTINGS BLA_AR i i+d

/* A step in time */

DISPLACEMENT TIME FORWARD d

/* No non-natural displacements here, */

/* because the samples themselves are not normal ones. */

/* Please listen to the associated samples: */

/* the auralizable samples are event-stochastic samples. */

END

/* All samples have the same length! */

/* Final mixdown of the auralized parts */

SAMPLE SIMPLE_MIX SS_AL MET_AL MET_AL

SAMPLE SIMPLE_MIX MET_AL IMP_AL IMP_AL

SAMPLE SIMPLE_MIX IMP_AL BLA_AL BLA_AL

SAMPLE SIMPLE_MIX SS_AR MET_AR MET_AR

SAMPLE SIMPLE_MIX MET_AR IMP_AR IMP_AR

SAMPLE SIMPLE_MIX IMP_AR BLA_AR BLA_AR

/* Monaural narrator voice */

SAMPLE SIMPLE_MIX NARRATOR_VOICE BLA_AL BLA_AL

SAMPLE SIMPLE_MIX NARRATOR_VOICE BLA_AR BLA_AR

/* This is the resulting Terminus environment */

SAMPLE MAKE_STEREO BLA_AL BLA_AR TERMINUS_A

SAMPLE STEREO_MIX BACKGROUND_SPACE_SOUND_A TERMINUS_A TERMINUS_A

/* Delete all temporary data */

SAMPLE DELETE SS_AL

SAMPLE DELETE MET_AL

SAMPLE DELETE IMP_AL

SAMPLE DELETE BLA_AL

SAMPLE DELETE SS_AR

SAMPLE DELETE MET_AR

SAMPLE DELETE IMP_AR

SAMPLE DELETE BLA_AR

/* Write elapsed computing time */

SAY 'Computing time='TIME('E')' seconds.'

/* Flash screen */

SPECIAL_FX FLASH

/* Audio message */

SPECIAL_FX PLAY_SOUND END_SAMPLE

/* End 3DA² session */

QUIT
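The final mixdown in these models is a fold. Each SIMPLE_MIX adds the running result into the next temporary sample, so the last sample in the chain ends up carrying every traced source. Here the narrator is then added unchanged to both ears, the two channels are paired into a stereo sample, and the space background is mixed on top.

The following Python sketch spells that out under assumptions the listing does not confirm:

- SIMPLE_MIX A B C stores the sample-wise sum of A and B in C;
- every sum saturates to the signed range of the output data width (8 bits in this model);
- MAKE_STEREO pairs the two channels frame by frame;
- STEREO_MIX sums two stereo samples channel by channel.

All function names are hypothetical.

```python
from typing import List, Tuple

Mono = List[int]
Stereo = List[Tuple[int, int]]


def clip(v: int, bits: int = 8) -> int:
    """Saturate v to the signed range of a bits-wide sample."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return max(lo, min(hi, v))


def simple_mix(a: Mono, b: Mono, bits: int = 8) -> Mono:
    """Assumed SIMPLE_MIX: saturating sample-wise sum of two equally long samples."""
    if len(a) != len(b):
        raise ValueError("all samples must have the same length")
    return [clip(x + y, bits) for x, y in zip(a, b)]


def mixdown(parts: List[Mono], bits: int = 8) -> Mono:
    """Chain of SIMPLE_MIX calls: the running result is mixed into each next part."""
    acc = parts[0]
    for nxt in parts[1:]:
        acc = simple_mix(acc, nxt, bits)
    return acc


def make_stereo(left: Mono, right: Mono) -> Stereo:
    """Assumed MAKE_STEREO: pair left and right samples frame by frame."""
    if len(left) != len(right):
        raise ValueError("all samples must have the same length")
    return list(zip(left, right))


def stereo_mix(a: Stereo, b: Stereo, bits: int = 8) -> Stereo:
    """Assumed STEREO_MIX: saturating sum, channel by channel."""
    if len(a) != len(b):
        raise ValueError("all samples must have the same length")
    return [(clip(la + lb, bits), clip(ra + rb, bits))
            for (la, ra), (lb, rb) in zip(a, b)]


def terminus(left_parts: List[Mono], right_parts: List[Mono],
             narrator: Mono, background: Stereo, bits: int = 8) -> Stereo:
    """Fold each ear's parts, add the mono narrator to both ears, add the background."""
    left = simple_mix(narrator, mixdown(left_parts, bits), bits)
    right = simple_mix(narrator, mixdown(right_parts, bits), bits)
    return stereo_mix(background, make_stereo(left, right), bits)


if __name__ == "__main__":
    out = terminus([[10, 100], [20, 50]], [[-10, -100], [-20, -50]],
                   [1, 1], [(0, 0), (5, -5)])
    print(out)  # prints [(31, -29), (127, -128)]
```

In this sketch saturation happens at every link of the chain, so a loud early part can clip before the quieter parts are added, and the mixing order then matters. Summing in a wider integer and clipping once at the end would avoid that.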

Appendix A: Data Files

"In dubiis non est agendum" (When in doubt, do not act.)

Each of the 3DA² data types can be edited in an ordinary text editor. Although novice users are advised not to tamper with these files, expert users can have some fun with them, for example by creating new primitive objects. The following lists show the file formats associated with the 3DA² software.

WARNING!

Users who input false data could make the program calculate very strange things. No responsibility is taken if the computer goes berserk or if some "new" acoustical phenomena are encountered.

A.1 Drawing File Form

File form:

$3D-Audio_DrawingHeader

# <number of objects> <Magnification-factor 1-10000>

<Focal-factor 1-500> <Measure: 0 = Meter, 1 = Feet, 2 = Off>

<Grid Size 0-11 ( 0 = Big , 11 = Small )>

$<Object #n model name>

#<Origin X_O, Y_O, Z_O>

<Eigenvectors E_X, E_Y, E_Z><Size S_X, S_Y, S_Z>

$miniOBJHYES

Remark: $miniOBJHNO is used instead if no object data exist; in that case, skip to the next object.

$ <Object#m primitive name>

# <Number of primitive objects>

# <Special primitive #> <Eight metric coordinates>

0: Tetrahedra; 8 coordinates